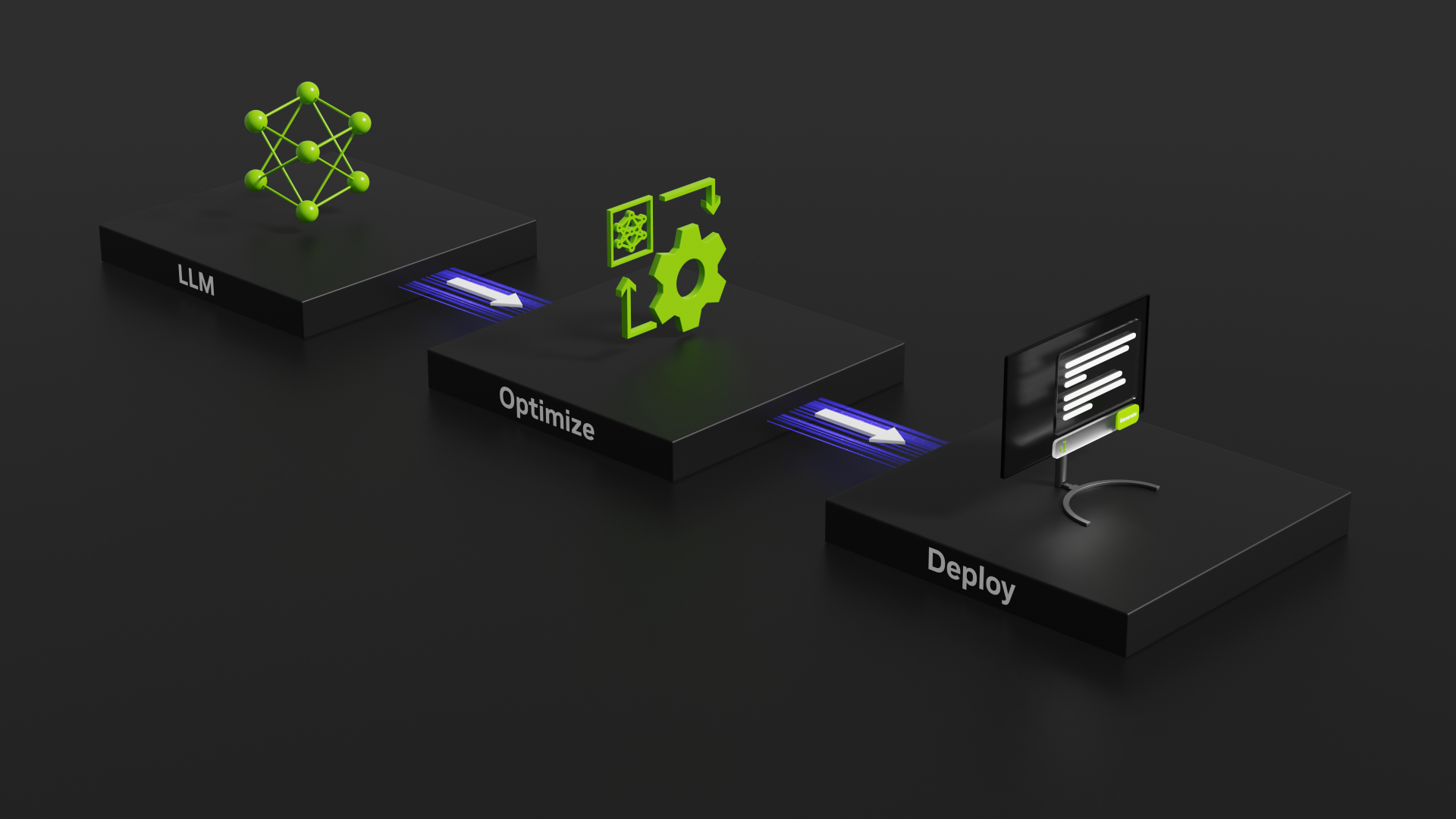

Evaluating the speed of GeForce RTX 40-Series GPUs using NVIDIA’s TensorRT-LLM tool for benchmarking GPU inference performance.


Results and thoughts from testing a variety of Stable Diffusion training methods using multiple GPUs.

For computing tasks like Machine Learning and some Scientific computing, the RTX3080Ti is an alternative to the RTX3090 when its 12GB of GDDR6X is sufficient (compared to the 24GB available on the RTX3090). 12GB is in line with earlier NVIDIA GPUs that were “workhorses” for ML/AI, such as the wonderful 2080Ti with its 11GB.

This is a follow-up post to “Quad RTX3090 GPU Wattage Limited “MaxQ” TensorFlow Performance”. It shows a way to have GPU power limits set automatically at boot using a simple script and a systemd service unit file.
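The post's own script isn't reproduced here; the following is a minimal illustrative sketch in Python of what such a boot-time script could look like. The GPU indices (0 to 3), the 280W target, and the `--apply` flag are hypothetical choices for this example. The `nvidia-smi` options used (`-pm 1` for persistence mode, `-i` to select a GPU, `-pl` to set a power limit in watts) are standard, and setting them normally requires root.

```python
import subprocess
import sys

# Hypothetical settings for illustration: adjust to your own GPUs and cooling.
POWER_LIMIT_WATTS = 280
GPU_INDICES = [0, 1, 2, 3]


def build_commands(gpu_indices, watts):
    """Return the nvidia-smi command lines to run, without executing them."""
    # Enable persistence mode so the driver stays loaded between jobs.
    commands = [["nvidia-smi", "-pm", "1"]]
    for idx in gpu_indices:
        # -i selects the GPU by index, -pl sets its power limit in watts.
        commands.append(["nvidia-smi", "-i", str(idx), "-pl", str(watts)])
    return commands


def main(argv):
    # Hypothetical --apply flag: without it the script only prints a dry run.
    apply = "--apply" in argv
    for cmd in build_commands(GPU_INDICES, POWER_LIMIT_WATTS):
        if apply:
            # check=True stops on the first failure (e.g. a limit out of range).
            subprocess.run(cmd, check=True)
        else:
            print("would run:", " ".join(cmd))


if __name__ == "__main__":
    main(sys.argv[1:])
```

To apply the limits at every boot, a script like this could be started as root by a systemd service of `Type=oneshot` whose `ExecStart` runs it with `--apply`, then enabled with `systemctl enable`; the unit and script names are up to you.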

Can you run four RTX3090s in one system under heavy compute load? Yes: using nvidia-smi, I reduced the power limit on the four GPUs from 350W to 280W and still achieved over 95% of maximum performance. The total power load “at the wall” was reasonable for a single power supply and a modest US residential 110V, 15A power line.
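The arithmetic behind the power-limit choice can be sketched as follows, using only the figures quoted above: four GPUs at 350W is 1400W of GPU board power, while at 280W it is 1120W, against a nominal 110V × 15A = 1650W circuit. This sketch counts the GPUs alone; CPU, memory, fans, and power supply losses come on top, which is why trimming 280W off the GPU total matters.

```python
# Figures from the post: 4 GPUs, 350 W default limit, 280 W reduced limit,
# and a 110 V, 15 A residential circuit.
NUM_GPUS = 4
DEFAULT_LIMIT_W = 350
REDUCED_LIMIT_W = 280
CIRCUIT_VOLTS = 110
CIRCUIT_AMPS = 15


def gpu_power_budget(num_gpus, limit_w):
    """Worst-case combined GPU board power in watts (GPUs only)."""
    return num_gpus * limit_w


def circuit_capacity(volts, amps):
    """Nominal circuit capacity in watts (P = V * I)."""
    return volts * amps


if __name__ == "__main__":
    cap = circuit_capacity(CIRCUIT_VOLTS, CIRCUIT_AMPS)
    for label, limit in [("default", DEFAULT_LIMIT_W), ("reduced", REDUCED_LIMIT_W)]:
        total = gpu_power_budget(NUM_GPUS, limit)
        print(f"{label}: {total} W of GPU power, {total / cap:.0%} of {cap} W")
```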

The GeForce RTX3070 has been released.

The RTX3070 is loaded with 8GB of memory, making it less suited for compute tasks than the 3080 and 3090 GPUs. We have some preliminary results for TensorFlow, NAMD and HPCG.

The second new NVIDIA RTX30 series card, the GeForce RTX3090 has been released.

The RTX3090 is loaded with 24GB of memory, making it a good replacement for the Titan RTX… at significantly less cost! The performance for Machine Learning and Molecular Dynamics on the RTX3090 is quite good, as expected.

The much anticipated NVIDIA GeForce RTX3080 has been released. How good is it with TensorFlow for machine learning? How about molecular dynamics with NAMD? I’ve got some preliminary numbers for you!

This is a short post comparing the performance of the RTX2070 Super with several GPU configurations from recent testing, using TensorFlow to run ResNet-50 and Big-LSTM benchmarks.

Does PCIe x16 give better performance than x8 for training models with Caffe when using cuDNN? Yes, but not by much!
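For context on the x16 versus x8 question, here is a sketch of the theoretical link bandwidth, assuming PCIe 3.0 (the generation is not stated above): 8 GT/s per lane with 128b/130b encoding gives roughly 0.985 GB/s per lane per direction, so about 15.75 GB/s for x16 and 7.88 GB/s for x8. Halving the lanes halves the link bandwidth, but that only slows the part of training spent moving data between host and GPU, which is one plausible reason the measured difference is small.

```python
# Assumption: a PCIe 3.0 link (8 GT/s per lane, 128b/130b line encoding).
TRANSFERS_PER_S_PER_LANE = 8e9
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_s(lanes):
    """Theoretical one-direction bandwidth of a PCIe 3.0 link in GB/s."""
    # Each transfer carries one bit per lane; remove encoding overhead.
    payload_bits_per_s = TRANSFERS_PER_S_PER_LANE * ENCODING_EFFICIENCY * lanes
    return payload_bits_per_s / 8 / 1e9  # bits -> bytes -> gigabytes


if __name__ == "__main__":
    for lanes in (16, 8):
        print(f"x{lanes}: {link_bandwidth_gb_s(lanes):.2f} GB/s per direction")
```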