Solutions for AI Development and Deployment

Each stage of developing AI models and agents requires progressively more computing power, and here at Puget Systems, we have solutions for you every step of the way!

Select Your AI Deployment Stage

No matter which step of the AI development process you are in, we have systems designed to help you now as well as in your next phase of deployment!

If you have questions about what type of computer hardware your specific situation needs, our expert consultants are available to provide individualized guidance or a quote for a custom AI workstation or server.

Our Customers Include


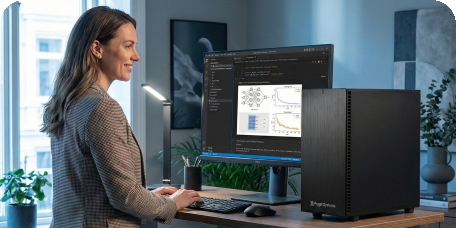

High-Performance Workstations and Servers for Developing, Piloting, Deploying, and Scaling AI Systems

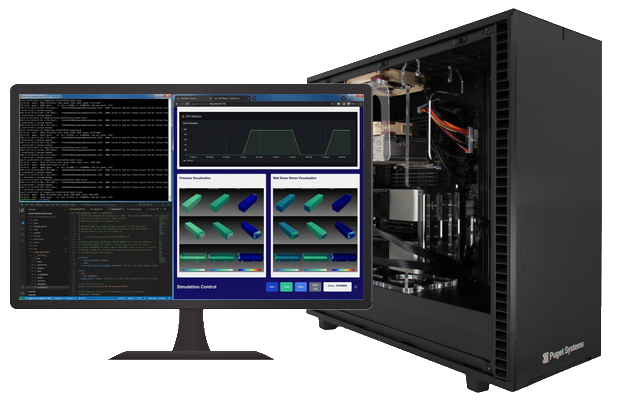

At Puget Systems, our workstation PCs and servers for AI development and deployment are crafted through a combination of our Puget Labs team’s expertise, benchmark testing, customer feedback, and the knowledge our consulting team has accumulated over the years.

Our goal is to make purchasing and owning computers a pleasure, not a hindrance to your work. We are here to help you throughout the process of developing your AI solutions, piloting them internally, deploying them across your team, and eventually scaling them out to your whole organization.

Talk to an Expert

We specialize in building workstation PCs, servers, and storage systems tailored for each of our customers. The best way we’ve found to accomplish that is to speak with you directly. There is no cost or obligation, and our no-pressure, non-commissioned consultants are experts at configuring a computer that will meet your specific needs. They are happy to discuss a quote you have already saved or guide you through each step of the process by asking a few questions about how you’ll be using your computer. There are several ways to start a conversation with us, so please pick what works best for you:

If you’d rather not wait, you can reach out to us via phone during our business hours.

Monday – Friday | 7am – 5pm (Pacific)

425-458-0273 | 1-888-784-3872