An introduction to NPU hardware and its growing presence outside of mobile computing devices.

Local alternatives to Cloud AI services

Presenting local AI-powered software options for tasks such as image & text generation, automatic speech recognition, and frame interpolation.

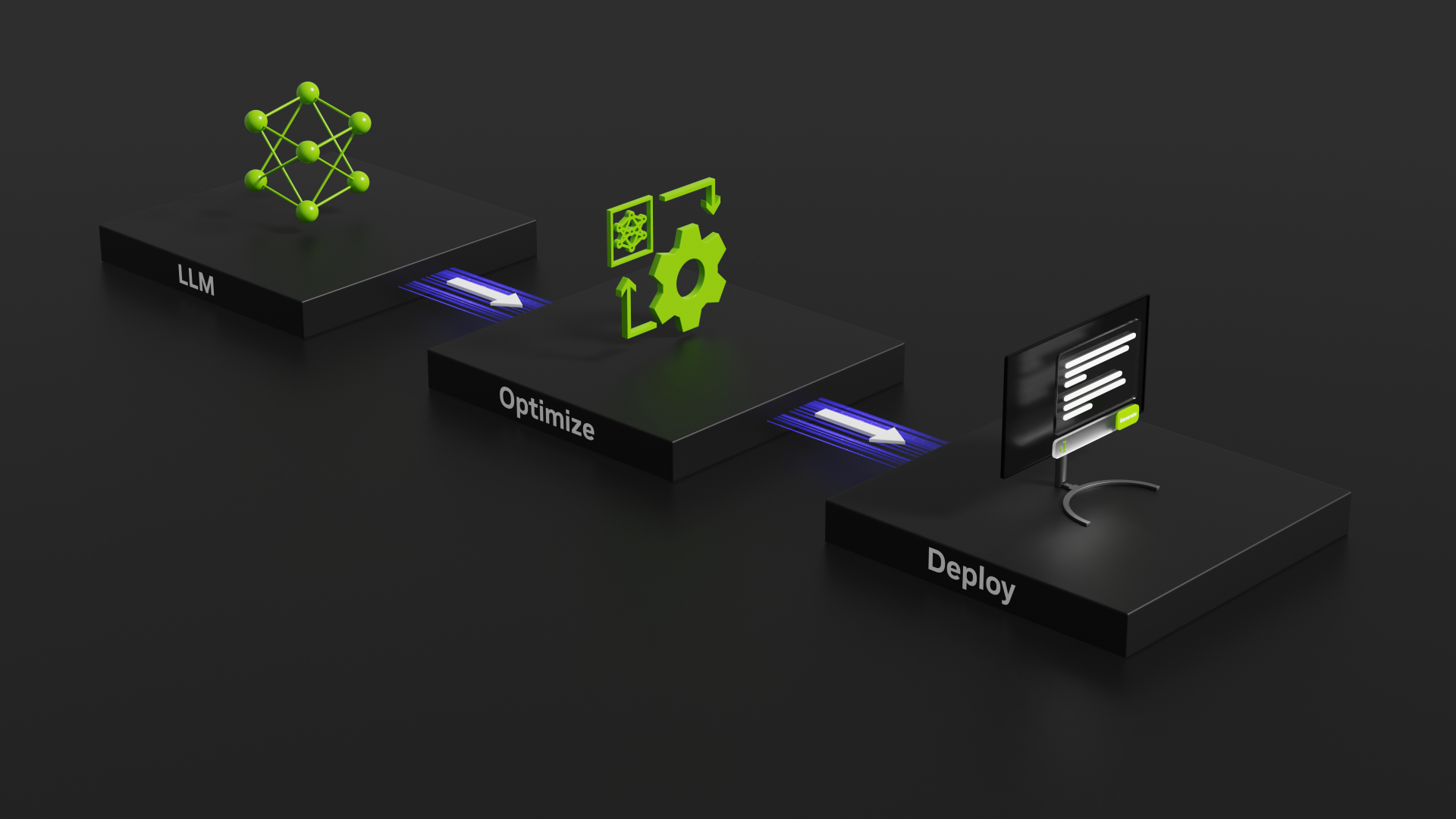

Benchmarking with TensorRT-LLM

Evaluating the speed of GeForce RTX 40-Series GPUs using NVIDIA’s TensorRT-LLM tool for benchmarking GPU inference performance.

Note: How To Set Up Apache on Ubuntu 22.04 For User public_html

This is a short note on setting up the Apache web server to allow system users to create personal websites and web apps in their home directories.
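
On Ubuntu the per-user feature comes from Apache's mod_userdir, which is typically enabled with `sudo a2enmod userdir` followed by an Apache restart. The URL-to-file mapping it performs can be sketched as below. This is only an illustration, assuming every home directory is `/home/<user>` (real Apache looks up the user's actual home directory) and a `UserDir public_html` setting; `userdir_path` is a hypothetical helper, not part of Apache.

```python
from pathlib import PurePosixPath
from typing import Optional
from urllib.parse import unquote, urlsplit

# Assumptions (hypothetical, for illustration only): every home directory is
# /home/<user>, and mod_userdir is configured with "UserDir public_html".
HOME_ROOT = PurePosixPath("/home")
USERDIR = "public_html"


def userdir_path(url: str) -> Optional[PurePosixPath]:
    """Map a /~user/... URL to the file it would be served from, or None."""
    path = unquote(urlsplit(url).path)
    if not path.startswith("/~"):
        return None  # not a per-user URL
    user, _, rest = path[2:].partition("/")
    if not user or user in (".", ".."):
        return None
    # Ignore empty and "." segments; reject ".." so we never leave public_html.
    parts = [p for p in rest.split("/") if p not in ("", ".")]
    if ".." in parts:
        return None
    return HOME_ROOT.joinpath(user, USERDIR, *parts)


if __name__ == "__main__":
    # Prints /home/alice/public_html/blog/index.html
    print(userdir_path("http://localhost/~alice/blog/index.html"))
```

In other words, a user only needs to create `~/public_html` (readable by the web server) and drop files into it.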

Molecular Dynamics Benchmarks GPU Roundup: GROMACS, NAMD 2, NAMD 3alpha on 12 GPUs

We have a new collection of GPU-accelerated Molecular Dynamics benchmark packages for GROMACS, NAMD 2, and NAMD 3-alpha10. (The benchmark packages will be available to the public soon.) In this post we present results for:

– 3 applications: GROMACS, NAMD 2, and NAMD 3-alpha10,

– 8 MD simulations,

– 12 different NVIDIA GPUs,

– 96 total results.

Self Contained Executable Containers Using Enroot Bundles

NVIDIA Enroot has a unique feature that lets you easily create an executable, self-contained, single-file package containing a container image AND the runtime to start it up! These “Enroot Bundles” can run themselves on a system whether or not Enroot is installed on it!
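
The bundle workflow boils down to two enroot commands: import an image into a squashfs file, then bundle that file. Below is a minimal sketch that drives them from Python. The helper names (`import_cmd`, `bundle_cmd`, `run`) and the `ubuntu` image and file names are hypothetical placeholders; by default it only prints the commands (dry run) rather than executing them.

```python
import shlex
import subprocess
from typing import List

# Hypothetical helpers that build enroot command lines; names are placeholders.


def import_cmd(image_uri: str, sqsh_file: str) -> List[str]:
    """Pull an image (e.g. docker://ubuntu) into a squashfs file."""
    return ["enroot", "import", "-o", sqsh_file, image_uri]


def bundle_cmd(sqsh_file: str, bundle_file: str) -> List[str]:
    """Package the squashfs image plus the enroot runtime into one executable file."""
    return ["enroot", "bundle", "-o", bundle_file, sqsh_file]


def run(cmds: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in cmds:
        print("$", shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run([
        import_cmd("docker://ubuntu", "ubuntu.sqsh"),
        bundle_cmd("ubuntu.sqsh", "ubuntu.run"),
        ["./ubuntu.run"],  # the bundle unpacks and starts the container itself
    ])
```

The resulting `ubuntu.run` file is what you would copy to another machine.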

Run “Docker” Containers with NVIDIA Enroot

Enroot is a simple and modern way to run “docker” or OCI containers. It provides an unprivileged user “sandbox” that integrates easily with a “normal” end user workflow. I like it for running development environments and especially for running NVIDIA NGC containers. In this post I’ll go through steps for installing enroot and some simple usage examples including running NVIDIA NGC containers.
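
The basic enroot life cycle is import, then create, then start. The sketch below builds that sequence for an NGC image. Note that enroot separates the registry host from the image path with `#` (as in `docker://nvcr.io#nvidia/...`). The repository `nvidia/cuda`, the literal `TAG`, the container name, and the helper functions are hypothetical placeholders. Pulling from NGC may also require registry credentials, which are not covered here.

```python
from typing import List

# Hypothetical helpers: build the enroot import -> create -> start sequence.
# The repository, tag, and container name are placeholders.


def ngc_uri(repo: str, tag: str) -> str:
    # enroot separates the registry host and the image path with '#'
    return f"docker://nvcr.io#{repo}:{tag}"


def enroot_workflow(uri: str, name: str, command: List[str]) -> List[List[str]]:
    sqsh = f"{name}.sqsh"
    return [
        ["enroot", "import", "-o", sqsh, uri],       # pull layers into a squashfs image
        ["enroot", "create", "--name", name, sqsh],  # unpack it as a named container
        ["enroot", "start", name, *command],         # run a command inside the container
    ]


if __name__ == "__main__":
    for cmd in enroot_workflow(ngc_uri("nvidia/cuda", "TAG"), "cuda", ["nvidia-smi"]):
        print(" ".join(cmd))
```

Keeping each step as a separate command mirrors how you would type them at a shell, and makes it easy to re-run only `enroot start` once the container has been created.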

Does Enabling WSL2 Affect Performance of Windows 10 Applications

WSL2 offers improved performance over version 1 by providing more direct access to the host hardware drivers. Recent “Insider Dev Channel” builds of Win10 even give WSL2 Linux applications access to the Windows NVIDIA display driver for GPU computing! The performance improvements with WSL2 are largely because this version runs as a privileged virtual machine on top of MS Hyper-V. This means that at least low-level support for the Hyper-V virtualization layer needs to be enabled to use it; in particular, the Windows feature “VirtualMachinePlatform” must be enabled for WSL2. We tested whether enabling it has any negative impact on the performance of Windows applications.

How To Install CUDA 10.1 on Ubuntu 19.04

Ubuntu 19.04 will be released soon, so I decided to see if CUDA 10.1 could be installed on it. Yes, it can, and it seems to work fine. In this post I walk through the install and show that docker and nvidia-docker also work. I also ran TensorFlow 2.0-alpha on the Ubuntu 19.04 beta.

NAMD Custom Build for Better Performance on your Modern GPU Accelerated Workstation — Ubuntu 16.04, 18.04, CentOS 7

In this post I will be compiling NAMD from source for good performance on modern GPU-accelerated workstation hardware. A custom NAMD build from source gives a moderate but significant performance boost, which matters because large simulations over many time-steps can run for days or weeks. I wanted to do some custom NAMD builds to ensure that modern workstation hardware was being well utilized. I include results for the STMV benchmark showing the custom build performance boost, using NVIDIA 1080 Ti and Titan V GPUs, as well as an “experimental” build using an Ubuntu 18.04 base.
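
To compare a stock build against a custom build, you need a performance number from each run. NAMD's "Benchmark time" log lines report days/ns, and the more familiar ns/day is simply its reciprocal. The sketch below assumes the log line has the form `Info: Benchmark time: <N> CPUs <t> s/step <d> days/ns ...`. The regex and helper names are hypothetical, and the parsing is a convenience rather than anything NAMD provides.

```python
import re
from typing import Optional, Tuple

# Assumed format of a NAMD benchmark log line:
#   Info: Benchmark time: <N> CPUs <t> s/step <d> days/ns <m> MB memory
BENCH_RE = re.compile(
    r"Benchmark time:\s+(\d+)\s+CPUs\s+([0-9.eE+-]+)\s+s/step\s+([0-9.eE+-]+)\s+days/ns"
)


def parse_benchmark(line: str) -> Optional[Tuple[int, float, float]]:
    """Return (cpus, seconds_per_step, days_per_ns), or None if the line doesn't match."""
    m = BENCH_RE.search(line)
    if m is None:
        return None
    return int(m.group(1)), float(m.group(2)), float(m.group(3))


def ns_per_day(days_per_ns: float) -> float:
    # ns/day is the reciprocal of NAMD's days/ns figure
    return 1.0 / days_per_ns


def percent_gain(baseline: float, custom: float) -> float:
    # Relative improvement of a custom build over a baseline, both in ns/day
    return 100.0 * (custom - baseline) / baseline
```

Feeding the benchmark lines from a stock run and a custom-build run through `ns_per_day` and then `percent_gain` gives the size of the boost as a single percentage.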