Presenting local AI-powered software options for tasks such as image & text generation, automatic speech recognition, and frame interpolation.

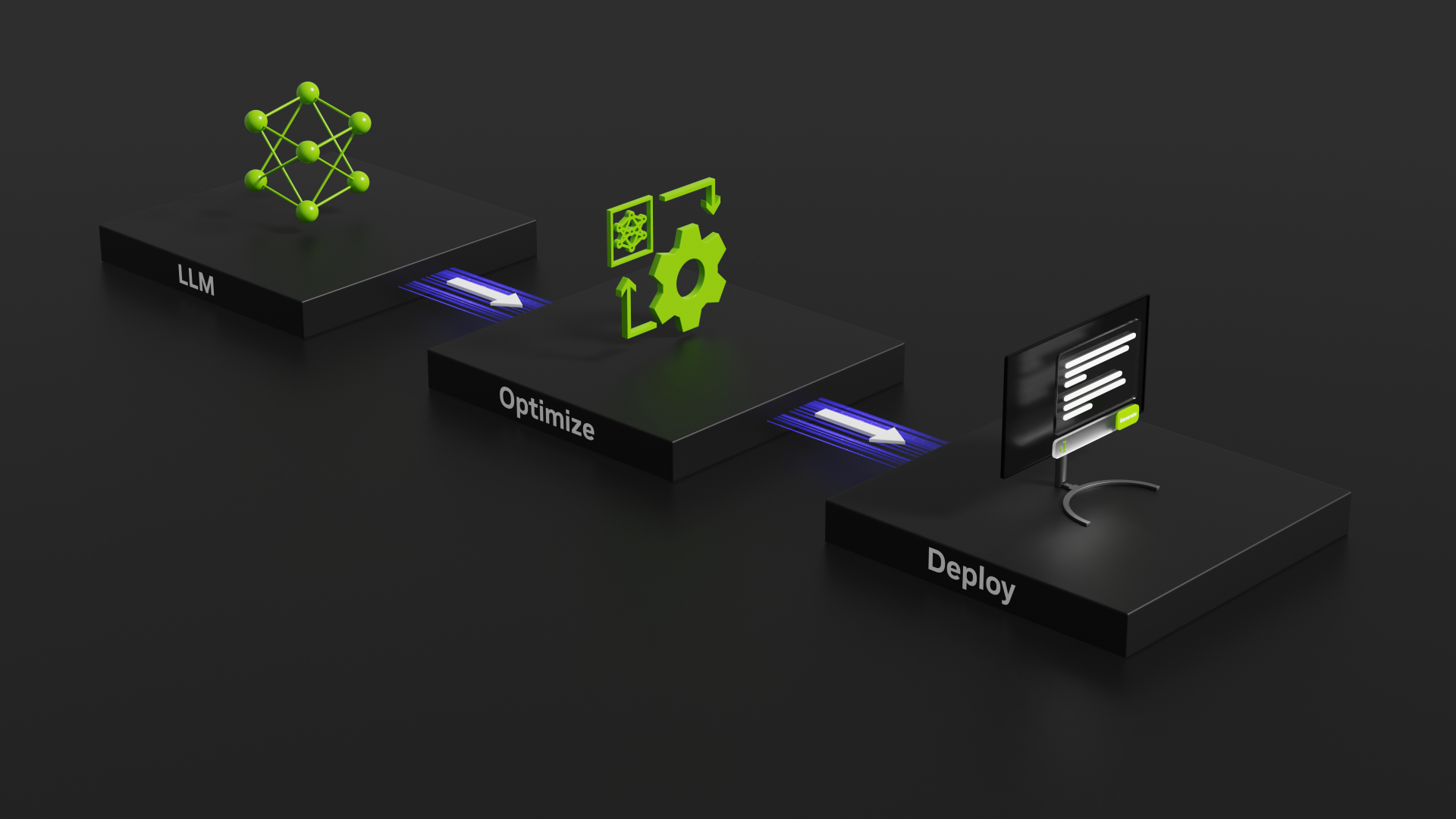

Evaluating the speed of GeForce RTX 40-Series GPUs using NVIDIA’s TensorRT-LLM tool for benchmarking GPU inference performance.

Results and thoughts with regard to testing a variety of Stable Diffusion training methods using multiple GPUs.

In this post I address the question that’s been on everyone’s mind: Can you run a state-of-the-art Large Language Model on-prem? With *your* data and *your* hardware? At a reasonable cost?

This is just a short post to announce a more usable version of the NVIDIA GPU power-limit setup script that I released a few months ago. This update to version 0.2 uses an interactive mode to set GPU power limits and optionally set up a systemd unit file to apply these limits on subsequent reboots.

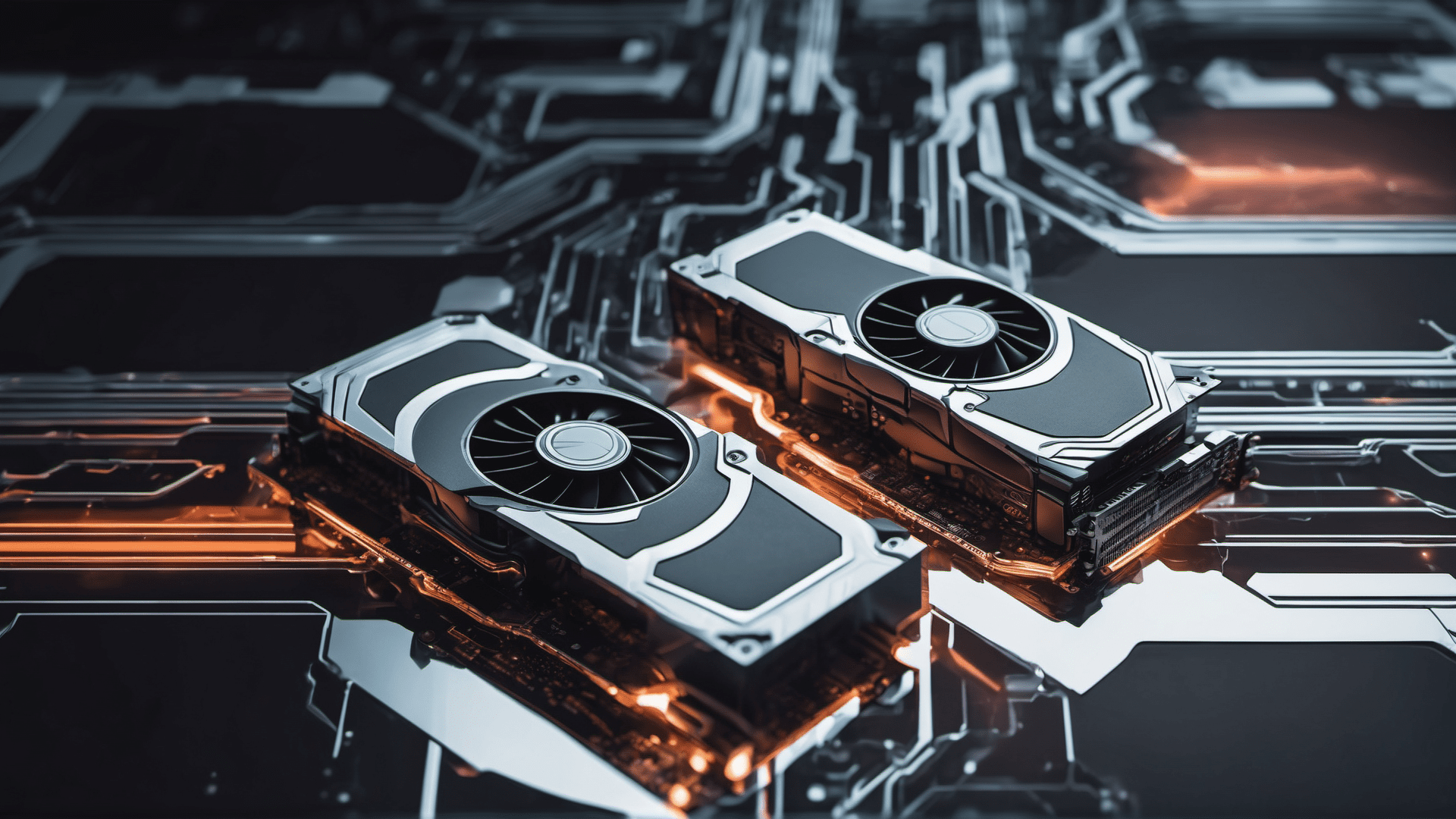

This post presents testing data showing that power-limit reduction on NVIDIA GPUs gives significant benefits for both high-wattage and lower-wattage GPUs. Power-limit vs. performance data is presented for 1-4 A5000 and 1-4 RTX3090 GPUs.

In this post I present a Bash shell script I recently put together for setting up automatic NVIDIA GPU power-limit lowering at system boot. This provides a reliable way to configure and maintain multi-GPU systems for stable operation under heavy load.
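As a rough illustration of the idea (not the script from the post), the sketch below builds the `nvidia-smi` commands that would lower the power limit per GPU and renders them into a systemd oneshot unit so they run at boot. The function names, the wattage values, the unit description, and the `/usr/bin/nvidia-smi` path are all assumptions made for this example; only the `-pm`, `-i`, and `-pl` flags of `nvidia-smi` are taken as given.

```python
from typing import Dict, List


def power_limit_commands(limits_watts: Dict[int, int]) -> List[List[str]]:
    """Build nvidia-smi invocations for a {gpu_index: watts} mapping.

    The first command enables persistence mode so the limits stay in
    effect while no process is using the GPU.
    """
    cmds = [["nvidia-smi", "-pm", "1"]]
    for index, watts in sorted(limits_watts.items()):
        # -i selects the GPU, -pl sets its power limit in watts
        cmds.append(["nvidia-smi", "-i", str(index), "-pl", str(watts)])
    return cmds


def systemd_unit(cmds: List[List[str]],
                 description: str = "Set NVIDIA GPU power limits") -> str:
    """Render a oneshot systemd unit that runs each command at boot.

    Assumes nvidia-smi is installed in /usr/bin (hypothetical path;
    adjust for the target system).
    """
    lines = ["[Unit]", f"Description={description}", "",
             "[Service]", "Type=oneshot"]
    # Type=oneshot allows several ExecStart lines, run in order
    lines += [f"ExecStart=/usr/bin/{' '.join(cmd)}" for cmd in cmds]
    lines += ["", "[Install]", "WantedBy=multi-user.target"]
    return "\n".join(lines) + "\n"
```

For example, `systemd_unit(power_limit_commands({0: 280, 1: 280}))` returns unit-file text with one `ExecStart` line for persistence mode and one per GPU. Writing that text under `/etc/systemd/system/` and enabling the unit requires root, as does running the commands themselves.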

NVIDIA Enroot has a unique feature that lets you easily create an executable, self-contained, single-file package containing a container image AND the runtime to start it up! The resulting package will run itself on a system with or without Enroot installed. These are “Enroot Bundles”.

For computing tasks like Machine Learning and some scientific computing, the RTX3080Ti is an alternative to the RTX3090 when 12GB of GDDR6X is sufficient (compared to the 24GB available on the RTX3090). 12GB is in line with former NVIDIA GPUs that were “work horses” for ML/AI, like the wonderful 2080Ti.

Enroot is a simple and modern way to run “docker” or OCI containers. It provides an unprivileged user “sandbox” that integrates easily with a “normal” end-user workflow. I like it for running development environments and especially for running NVIDIA NGC containers. In this post I’ll go through the steps for installing Enroot and some simple usage examples, including running NVIDIA NGC containers.