Introduction

I just confirmed that the RTX6000Ada performance issues were due to a bad motherboard on the test bed that was used. It’s still odd that it was just that MB plus 6000Ada that had any problems. We have confirmed that the Linux workloads I ran and the GPU rendering workloads on Windows are what we expect from this great GPU!

The issue with P2P on “2 x 4090s” is “resolved”. NVIDIA’s intention is for P2P to be unavailable on GeForce.

NVIDIA driver 525.105.17 has P2P “properly” disabled on GeForce.

I reran the tests from this post using this new driver and the results are as expected. I have added an “Appendix P2P Update” to the end of this post with the (brief) results from the updated testing.

I was prompted to do some testing by a commenter on one of my recent posts, NVIDIA RTX4090 ML-AI and Scientific Computing Performance (Preliminary). They had concerns about problems with dual NVIDIA RTX4090s on AMD Threadripper Pro platforms.

They pointed out the following links,

- Parallel training with 4 cards 4090 cannot be performed on AMD 5975WX, stuck at the beginning

- Standard nVidia CUDA tests fail with dual RTX 4090 Linux box

- DDP training on RTX 4090 (ADA, cu118)

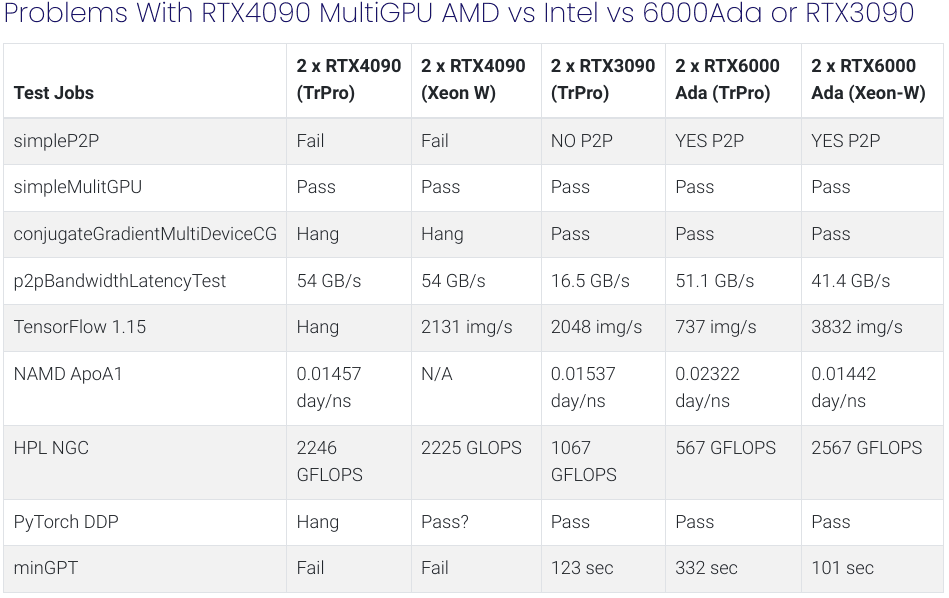

I ran some applications to reproduce the problems reported above and tried to dig deeper into the issues with more extensive testing. The included table below tells all!

Before we get to the results table and comments, here are the hardware and software that were used for testing.

Test Hardware and Configurations

This is the most relevant component information.

AMD

- ASUS Pro WS WRX80E-SAGE SE WIFI

- BIOS 1003

- Ryzen Threadripper PRO 5995WX 64-Cores

- With GRUB_CMDLINE_LINUX="amd_iommu=on iommu=pt"

Intel

- Supermicro X12SPA-TF

- BIOS 1.4a

- Xeon(R) W-3365 32-Cores

NVIDIA

- NVIDIA GeForce RTX 4090

- NVIDIA RTX 6000 Ada Generation

- NVIDIA GeForce RTX 3090

OS Info

- Ubuntu 22.04.1 LTS

- NVIDIA Driver Version: 525.85.05

- CUDA Version: 12.0
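The AMD test bed listed above was booted with the kernel parameters `amd_iommu=on iommu=pt`. As a minimal sketch (not part of the original testing), the following Python checks whether a kernel command line contains those parameters. The function name `missing_kernel_params` is hypothetical, and reading `/proc/cmdline` assumes a Linux system.

```python
from pathlib import Path

# Kernel parameters listed for the AMD test bed in this post.
EXPECTED_PARAMS = ("amd_iommu=on", "iommu=pt")


def missing_kernel_params(cmdline, expected=EXPECTED_PARAMS):
    """Return the expected parameters that are absent from a kernel command line."""
    present = set(cmdline.split())
    return [param for param in expected if param not in present]


if __name__ == "__main__":
    # /proc/cmdline holds the command line of the running kernel on Linux.
    proc_cmdline = Path("/proc/cmdline")
    if proc_cmdline.exists():
        missing = missing_kernel_params(proc_cmdline.read_text())
        print("missing parameters:", missing if missing else "none")
```

A check like this only confirms that the parameters were passed to the kernel. It says nothing about whether they help or hurt multi-GPU behavior.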

Test Applications

From NVIDIA CUDA 12 Samples

- simpleP2P

- simpleMultiGPU

- conjugateGradientMultiDeviceCG

- p2pBandwidthLatencyTest

From NVIDIA NGC Containers

- TensorFlow 1.15 ResNet50

- HPL (FP 64 Linpack performs many times faster on NVIDIA Compute GPUs but I still like to run this benchmark on GeForce and Pro GPUs)

- PyTorch DDP

Local install

- NAMD 2.14 ApoA1

- PugetBench-minGPT (based on Andrej Karpathy’s minGPT; uses PyTorch DDP)

Testing Results

Problems With RTX4090 MultiGPU AMD vs Intel vs 6000Ada or RTX3090

| Test Jobs | 2 x RTX4090 (TrPro) | 2 x RTX4090 (Xeon W) | 2 x RTX3090 (TrPro) | 2 x RTX6000 Ada (TrPro) | 2 x RTX6000 Ada (Xeon-W) |
|---|---|---|---|---|---|
| simpleP2P | Fail | Fail | NO P2P | YES P2P | YES P2P |
| simpleMultiGPU | Pass | Pass | Pass | Pass | Pass |
| conjugateGradientMultiDeviceCG | Hang | Hang | Pass | Pass | Pass |
| p2pBandwidthLatencyTest | 54 GB/s | 54 GB/s | 16.5 GB/s | 51.1 GB/s | 41.4 GB/s |
| TensorFlow 1.15 | Hang | 2131 img/s | 2048 img/s | 737 img/s | 3832 img/s |
| NAMD ApoA1 | 0.01457 day/ns | N/A | 0.01537 day/ns | 0.02322 day/ns | 0.01442 day/ns |
| HPL NGC | 2246 GFLOPS | 2225 GFLOPS | 1067 GFLOPS | 567 GFLOPS | 2567 GFLOPS |
| PyTorch DDP | Hang | Pass? | Pass | Pass | Pass |
| minGPT | Fail | Fail | 123 sec | 332 sec | 101 sec |
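The NAMD row reports day/ns, where lower is better. Many people find ns/day easier to compare, so here is a small sketch of the conversion using the values from the table. It also shows how a day/ns figure relates to NAMD's s/step figure, such as the `0.00125643 s/step 0.014542 days/ns` benchmark line in the Appendix P2P Update. The 1 fs timestep is an assumption about the benchmark input (it is consistent with that line, but not stated in this post), and the function names are hypothetical.

```python
SECONDS_PER_DAY = 86_400


def ns_per_day(days_per_ns):
    """Invert a day/ns figure (lower is better) into ns/day (higher is better)."""
    return 1.0 / days_per_ns


def days_per_ns_from_step_time(seconds_per_step, timestep_fs=1.0):
    """Convert wall-clock seconds per MD step into days per simulated nanosecond.

    timestep_fs=1.0 is an assumption about the benchmark input, not a value from the post.
    """
    steps_per_ns = 1.0e6 / timestep_fs  # 1 ns = 1,000,000 fs
    return seconds_per_step * steps_per_ns / SECONDS_PER_DAY


# day/ns values copied from the NAMD ApoA1 row of the table.
table_days_per_ns = {
    "2 x RTX4090 (TrPro)": 0.01457,
    "2 x RTX3090 (TrPro)": 0.01537,
    "2 x RTX6000 Ada (TrPro)": 0.02322,
    "2 x RTX6000 Ada (Xeon-W)": 0.01442,
}

for config, value in table_days_per_ns.items():
    print(f"{config}: {ns_per_day(value):.1f} ns/day")

# Cross-check against the s/step figure quoted in the Appendix P2P Update.
print(f"{days_per_ns_from_step_time(0.00125643):.6f} days/ns")
```

The same caveat applies to the converted numbers as to the table itself: the runs did not all use the same input parameters, so these are not a performance comparison.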

Notes:

There are 2 major problems:

NVIDIA:

- It looks like P2P functionality is “partially” broken with 2 x 4090. Some jobs that use it either fail or produce corrupt results.

- However, P2P is available and shows good GPU-GPU bandwidth.

- PyTorch distributed data-parallel (DDP) corrupts or hangs. It finishes/returns on Xeon-W but there is no success verification.

- minGPT (also using DDP) corrupts and fails.

- Everything works as expected with 2 x 3090 on the AMD Tr Pro system.

- Everything works with 2 x 6000 Ada on both AMD Tr Pro and Intel Xeon-W systems but performance is very bad on the AMD Tr Pro system.
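To make the simpleP2P failure mode more concrete: in the simpleP2P output in the Appendix, every reported element has `val = 0.000000` while the reference value is 4 times the element index, and element 0 is not reported. That pattern is what you would see if two passes each doubled the data through the peer GPU, but the peer reads returned nothing useful. The pure-Python sketch below emulates that pattern. It is an interpretation of the output, not the sample's actual CUDA code. Initializing the host buffer to the element index and modeling broken P2P as "peer reads return zeros" are both assumptions chosen to reproduce the output, not a diagnosis.

```python
def peer_read(buffer, p2p_works):
    """Return what a kernel sees when reading the other GPU's buffer.

    Returning zeros when P2P is broken is an assumption made for this sketch.
    """
    return list(buffer) if p2p_works else [0.0] * len(buffer)


def run_two_doubling_passes(host, p2p_works):
    """Emulate two kernels that each read the peer's buffer and write 2x the value."""
    gpu0 = list(host)                                     # host buffer copied to GPU0
    gpu1 = [2.0 * x for x in peer_read(gpu0, p2p_works)]  # kernel on GPU1 reads GPU0
    gpu0 = [2.0 * x for x in peer_read(gpu1, p2p_works)]  # kernel on GPU0 reads GPU1
    return gpu0                                           # copied back to the host


def verification_errors(result):
    """Compare each element against 4x its index, as the reference values in the output suggest."""
    return [(i, val, 4.0 * i) for i, val in enumerate(result) if val != 4.0 * i]


host = [float(i) for i in range(16)]  # assumed initialization: element i holds i
for p2p_works in (True, False):
    errors = verification_errors(run_two_doubling_passes(host, p2p_works))
    print(f"p2p_works={p2p_works}: {len(errors)} verification errors")
    for index, val, ref in errors[:3]:
        print(f"  element {index}: val = {val:.6f}, ref = {ref:.6f}")
```

In this model element 0 passes even when P2P is broken, because its reference value is also 0. That matches the errors in the real output starting at element 1.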

AMD:

- All the issues with 2 x 4090 are present on the AMD Tr Pro system and also on the Xeon-W except for the TensorFlow ResNet job run.

- On TrPro, TensorFlow 1.15 ResNet50 with 2×4090 (using NV NCCL) hangs. It runs fine on Xeon-W.

- Performance is very bad with 2 x 6000 Ada on TrPro (GPU clock stays at 629MHz and power usage is low).
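The low-clock condition shows up in the HPL console output as repeated `!!! WARNING` lines (see the Appendix). As a rough sketch, assuming that line format, the following Python pulls the clock and power readings out of such a log and flags the case where the clock never changes. The function names and the zero-spread threshold are hypothetical, and a constant clock is only a hint: a GPU pinned at its maximum clock would also trip this check.

```python
import re

# Two warning lines and one progress line copied from the HPL output in the Appendix.
SAMPLE_LOG = """\
!!! WARNING: Rank: 1 : trp64 : GPU 0000:61:00.0 Clock: 626 MHz Temp: 49 C Power: 70 W PCIe gen 4 x16
!!! WARNING: Rank: 0 : trp64 : GPU 0000:41:00.0 Clock: 626 MHz Temp: 50 C Power: 65 W PCIe gen 4 x16
Prog= 2.38% N_left= 71424 Time= 10.63 Time_left= 435.92 iGF= 557.23 GF= 557.23 iGF_per= 278.62 GF_per= 278.62
"""

WARNING_RE = re.compile(
    r"Rank:\s*(?P<rank>\d+)\s*:.*?GPU\s+(?P<bus>\S+)\s+"
    r"Clock:\s*(?P<clock>\d+)\s*MHz\s+"
    r"Temp:\s*(?P<temp>\d+)\s*C\s+"
    r"Power:\s*(?P<power>\d+)\s*W"
)


def parse_gpu_warnings(log_text):
    """Extract per-GPU readings from HPL warning lines; other lines are ignored."""
    readings = []
    for line in log_text.splitlines():
        match = WARNING_RE.search(line)
        if match:
            readings.append({
                "rank": int(match.group("rank")),
                "bus": match.group("bus"),
                "clock_mhz": int(match.group("clock")),
                "temp_c": int(match.group("temp")),
                "power_w": int(match.group("power")),
            })
    return readings


def clock_is_stuck(readings, max_spread_mhz=0):
    """Return True when every reading reports (nearly) the same clock."""
    clocks = [r["clock_mhz"] for r in readings]
    return len(clocks) > 1 and max(clocks) - min(clocks) <= max_spread_mhz


readings = parse_gpu_warnings(SAMPLE_LOG)
print(readings)
print("clock looks stuck:", clock_is_stuck(readings))
```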

**The tests in the table were mainly for functionality rather than performance. But, I did use higher performance input parameters on the 2×6000 Ada testing on Xeon. Optimal performance input parameters were NOT used on the dual RTX4090 and RTX3090 job runs. Don’t use this post as a performance comparison!**

NVIDIA and AMD were made aware of these test results 1 week before this post was published, but have not yet replied, as of the time of this post.

I do not have workarounds or fixes for these problems! The performance issues on the AMD platform are particularly troubling and we will do more troubleshooting to see if a solution to the issues can be found.

Conclusion

I hope that publishing these results will make the issues with RTX4090 multi GPU and AMD WRX80 motherboards more visible to the public. I also hope NVIDIA and AMD will be prompted to address the reported problems.

My testing was on Linux; however, we have also seen consistent issues in some of our Windows testing, in particular differences in behavior between 2 x RTX4090 and 2 x 6000 Ada.

If fixes or workarounds are found they will be posted back here as notes at the top of the page.

The Appendix provides more detail on a few of the job run failures.

Appendix Select job output excerpts and comments

P2P

On both the AMD and Intel test platforms simpleP2P fails with a Verification error but p2pBandwidthLatencyTest shows increased bandwidth with P2P enabled. For example on the AMD platform,

simpleP2P

(cuda12-20.04)kinghorn@trp64:~/cuda-samples-12.0/bin/x86_64/linux/release$ ./simpleP2P

[./simpleP2P] - Starting...

Checking for multiple GPUs...

CUDA-capable device count: 2

Checking GPU(s) for support of peer to peer memory access...

> Peer access from NVIDIA GeForce RTX 4090 (GPU0) -> NVIDIA GeForce RTX 4090 (GPU1) : Yes

> Peer access from NVIDIA GeForce RTX 4090 (GPU1) -> NVIDIA GeForce RTX 4090 (GPU0) : Yes

Enabling peer access between GPU0 and GPU1...

Allocating buffers (64MB on GPU0, GPU1 and CPU Host)...

Creating event handles...

cudaMemcpyPeer / cudaMemcpy between GPU0 and GPU1: 25.07GB/s

Preparing host buffer and memcpy to GPU0...

Run kernel on GPU1, taking source data from GPU0 and writing to GPU1...

Run kernel on GPU0, taking source data from GPU1 and writing to GPU0...

Copy data back to host from GPU0 and verify results...

Verification error @ element 1: val = 0.000000, ref = 4.000000

Verification error @ element 2: val = 0.000000, ref = 8.000000

Verification error @ element 3: val = 0.000000, ref = 12.000000

Verification error @ element 4: val = 0.000000, ref = 16.000000

Verification error @ element 5: val = 0.000000, ref = 20.000000

Verification error @ element 6: val = 0.000000, ref = 24.000000

Verification error @ element 7: val = 0.000000, ref = 28.000000

Verification error @ element 8: val = 0.000000, ref = 32.000000

Verification error @ element 9: val = 0.000000, ref = 36.000000

Verification error @ element 10: val = 0.000000, ref = 40.000000

Verification error @ element 11: val = 0.000000, ref = 44.000000

Verification error @ element 12: val = 0.000000, ref = 48.000000

Disabling peer access...

Shutting down...

Test failed!

p2pBandwidthLatencyTest

...

Bidirectional P2P=Disabled Bandwidth Matrix (GB/s)

D\D 0 1

0 916.96 30.98

1 30.75 922.65

Bidirectional P2P=Enabled Bandwidth Matrix (GB/s)

D\D 0 1

0 918.04 54.12

1 54.12 923.09

...

PugetBench-minGPT

minGPT fails with apparent data corruption on both AMD and Intel. This is using PyTorch DDP for multiGPU.

...

RuntimeError: probability tensor contains either `inf`, `nan` or element < 0

Console screenshot on Tr Pro during HPL job run

This output clip shows the “stuck” GPU clock frequency and only a small fraction of the GPU power being used on the AMD Tr Pro system.

!!! WARNING: Rank: 1 : trp64 : GPU 0000:61:00.0 Clock: 626 MHz Temp: 49 C Power: 70 W PCIe gen 4 x16

!!! WARNING: Rank: 0 : trp64 : GPU 0000:41:00.0 Clock: 626 MHz Temp: 50 C Power: 65 W PCIe gen 4 x16

!!! WARNING: Rank: 1 : trp64 : GPU 0000:61:00.0 Clock: 626 MHz Temp: 50 C Power: 70 W PCIe gen 4 x16

!!! WARNING: Rank: 0 : trp64 : GPU 0000:41:00.0 Clock: 626 MHz Temp: 50 C Power: 65 W PCIe gen 4 x16

Prog= 2.38% N_left= 71424 Time= 10.63 Time_left= 435.92 iGF= 557.23 GF= 557.23 iGF_per= 278.62 GF_per= 278.62

!!! WARNING: Rank: 0 : trp64 : GPU 0000:41:00.0 Clock: 626 MHz Temp: 51 C Power: 66 W PCIe gen 4 x16

Prog= 3.56% N_left= 71136 Time= 15.81 Time_left= 428.61 iGF= 565.41 GF= 559.91 iGF_per= 282.70 GF_per= 279.96

!!! WARNING: Rank: 1 : trp64 : GPU 0000:61:00.0 Clock: 626 MHz Temp: 50 C Power: 70 W PCIe gen 4 x16

!!! WARNING: Rank: 0 : trp64 : GPU 0000:41:00.0 Clock: 626 MHz Temp: 51 C Power: 65 W PCIe gen 4 x16

Prog= 4.72% N_left= 70848 Time= 20.85 Time_left= 420.54 iGF= 575.77 GF= 563.75 iGF_per= 287.89 GF_per= 281.87

Appendix P2P Update

Driver

nvidia-smi

Thu Apr 13 20:26:31 2023

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 525.105.17 Driver Version: 525.105.17 CUDA Version: 12.0 |

|-------------------------------+----------------------+----------------------+

simpleP2P

[./simpleP2P] - Starting...

Checking for multiple GPUs...

CUDA-capable device count: 2

Checking GPU(s) for support of peer to peer memory access...

> Peer access from NVIDIA GeForce RTX 4090 (GPU0) -> NVIDIA GeForce RTX 4090 (GPU1) : No

> Peer access from NVIDIA GeForce RTX 4090 (GPU1) -> NVIDIA GeForce RTX 4090 (GPU0) : No

Two or more GPUs with Peer-to-Peer access capability are required for ./simpleP2P.

Peer to Peer access is not available amongst GPUs in the system, waiving test.

conjugateGradientMultiDeviceCG

Starting [conjugateGradientMultiDeviceCG]...

GPU Device 0: "NVIDIA GeForce RTX 4090" with compute capability 8.9

GPU Device 1: "NVIDIA GeForce RTX 4090" with compute capability 8.9

Device=0 CANNOT Access Peer Device=1

Ignoring device 1 (max devices exceeded)

p2pBandwidthLatencyTest

[P2P (Peer-to-Peer) GPU Bandwidth Latency Test]

Device: 0, NVIDIA GeForce RTX 4090, pciBusID: 41, pciDeviceID: 0, pciDomainID:0

Device: 1, NVIDIA GeForce RTX 4090, pciBusID: 61, pciDeviceID: 0, pciDomainID:0

Device=0 CANNOT Access Peer Device=1

Device=1 CANNOT Access Peer Device=0

Bidirectional P2P=Disabled Bandwidth Matrix (GB/s)

D\D 0 1

0 918.25 30.92

1 30.79 922.10

Bidirectional P2P=Enabled Bandwidth Matrix (GB/s)

D\D 0 1

0 919.12 31.06

1 30.84 923.19

(tf1.15-ngc)kinghorn@trp64:/workspace/nvidia-examples/cnn$ mpiexec -np 2 --allow-run-as-root python resnet.py --layers=50 --batch_size=128 --precision=fp16

This job runs fine.

2601.8 images/sec

../NAMD_2.14_Linux-x86_64-multicore-CUDA/namd2 +p128 +setcpuaffinity +idlepoll +devices 0,1 apoa1.namd

NAMD was as expected. It ran fine on the 2 GPUs.

Info: Benchmark time: 128 CPUs 0.00125643 s/step 0.014542 days/ns 1034.08 MB memory

kinghorn@trp64:~/pugetbench-mingpt-linux-v0.1.1a$ ./pugetbench-mingpt -i 501 -b 64 --parallel

This result is very good. Uses PyTorch DDP.

****************************************************************

* Time = 76.54 seconds for 501 iterations, batchsize 64

****************************************************************

CUDA_VISIBLE_DEVICES=0,1 mpirun --mca btl smcuda,self -x UCX_TLS=sm,cuda,cuda_copy,cuda_ipc -np 2 hpl.sh --dat ./HPL.dat --cpu-affinity 0:1 --cpu-cores-per-rank 4 --gpu-affinity 0:1

This job segfaults and I could not resolve that with ulimit adjustments.

PROC COL NET_BW [MB/s ]

[trp64:09093] *** An error occurred in MPI_Sendrecv

[trp64:09093] *** reported by process [1928921089,1]

[trp64:09093] *** on communicator MPI_COMM_WORLD

[trp64:09093] *** MPI_ERR_COMM: invalid communicator

[trp64:09093] *** MPI_ERRORS_ARE_FATAL (processes in this communicator will now abort,

[trp64:09093] *** and potentially your MPI job)

Happy computing! –dbk @dbkinghorn