Hardware Recommendations for Scientific Computing

Scientific computing is a vast domain with myriad individual applications that all have their own specific hardware needs, but the guidance here will get you started in the right direction for your next workstation.

Puget Labs Certified

These hardware configurations have been developed and verified through frequent testing by our Labs team. Click here for more details.

Scientific Computing System Requirements

Quickly Jump To: Processor (CPU) • Video Card (GPU) • Memory (RAM) • Storage (Drives)

Scientific Computing is a vast domain! There are thousands of “scientific” applications and it is often the case that what you are working with is based on your own code development efforts. Performance bottlenecks can arise from many types of hardware, software, and job-run characteristics. Recommendations on “system requirements” published by software vendors (or developers) may not be ideal. They could be based on outdated testing or limited configuration variation. However, it is possible to make some general recommendations. The Q&A discussion below, with answers provided by Dr. Donald Kinghorn, will hopefully prove useful. We also recommend that you visit his Puget Systems HPC blog for more info.

Processor (CPU)

The CPU may be the most important consideration for a scientific computing workstation. The best choice will depend on the parallel scalability of your application, memory access patterns, and whether GPU acceleration is available or not.

What CPU is best for scientific computing?

There are two main choices: Intel Xeon (single or dual socket) and AMD Threadripper PRO / EPYC (which are based on the same technology). For the majority of cases our recommendation is single socket processors like Xeon-W and Threadripper PRO. Current versions of these CPUs offer options with high core counts and large memory capacity without the need for the complexity, expense, and memory & core binding complications of dual socket systems.

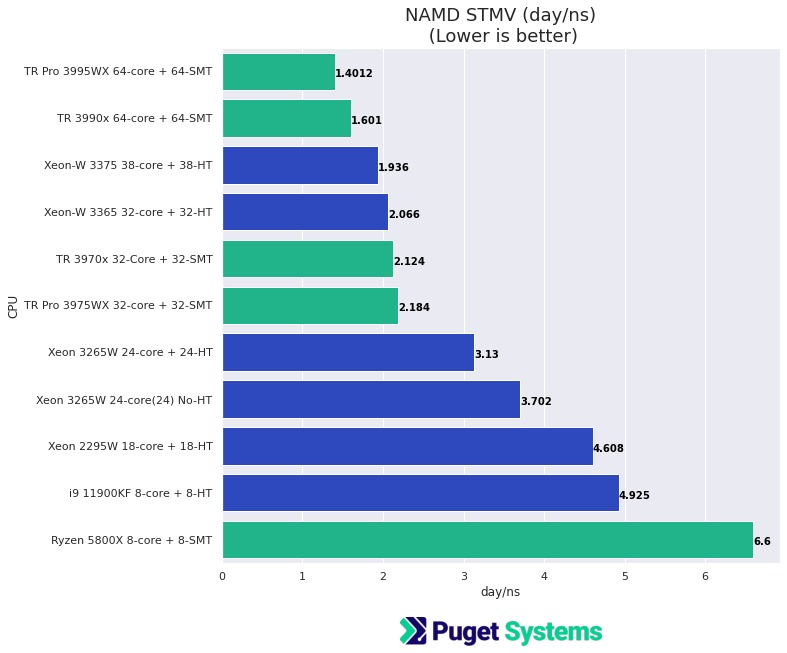

Do more CPU cores make scientific computing faster?

This depends on two main factors:

- The parallel scalability of your application

- The memory-bound character of your application.

It is always good to understand how well your job runs will scale. To better understand that, check out the info about Amdhal’s Law in our HPC blog.

Also, if your application is memory-bound then it will be limited by memory channels and may give best performance with fewer cores. However, it is often an advantage to have many cores to provide more available higher level cache – even if you use less than half of the available cores on a regular basis. For a well scaling application, an Intel or AMD 32-core CPU will likely give good, balanced hardware utilization and performance.

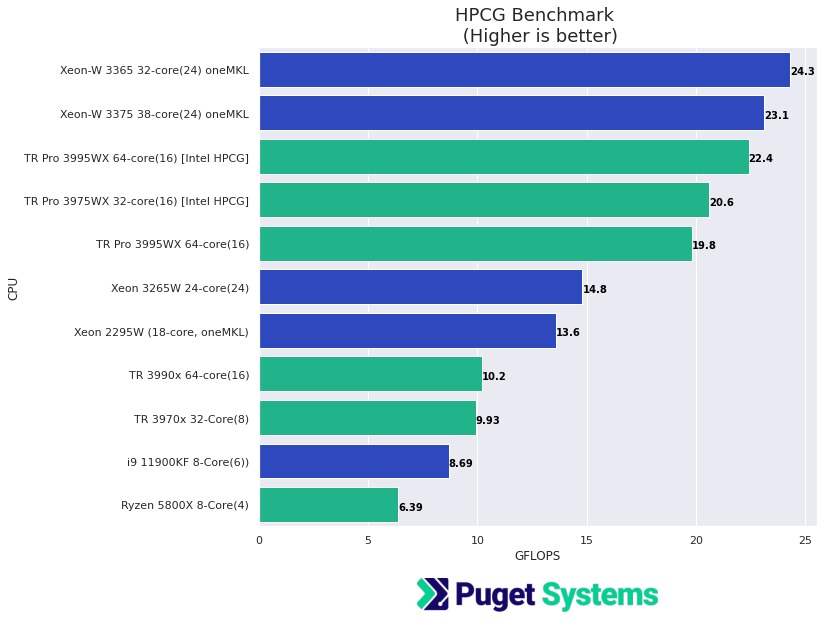

Does scientific computing work better with Intel or AMD CPUs?

Either Intel Xeon or AMD Threadripper PRO processors are excellent. To help determine which is best for you, one thing to keep in mind is that Intel Xeons have AVX512 vector units vs AVX2 (256) vector units on AMD. If your application is linked to Intel MKL then AVX512 can have a significant advantage. However using AVX512 comes a the cost of lowering system clocks when it is in use to maintain power limits. AVX2 offers good vectorization performance and a well balanced overall operation flow that is often just as good in “the real world”. However, if your application is specifically linked with Intel MKL then an Intel CPU is a good choice.

Why are Xeon or Threadripper PRO recommended rather than more “consumer” level CPUs?

The most important reason for this recommendation is memory channels. Both Intel Xeon W-3300 and AMD Threadripper Pro 3000 Series support 8 memory channels, which can have a significant impact on performance for many scientific applications. Another consideration is that these processors are “enterprise grade” and the overall platform is likely to be robust under heavy sustained compute load.

Video Card (GPU)

If your application has GPU (graphics processing unit) acceleration then you should try to utilize it! Performance on GPUs can be many times greater than on CPUs for highly parallel calculations.

What GPU (video card) is best for scientific visualization?

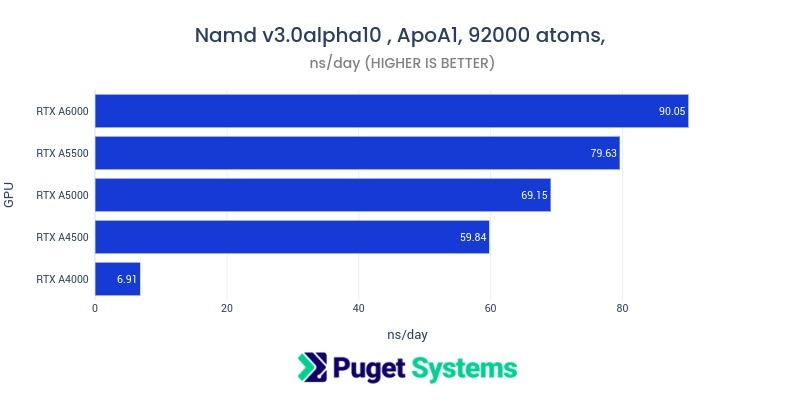

If your use for the GPU is scientific visualization, then a good recommendation is a higher end NVIDIA RTX A-series card like the A4000 or A5000. If you are working with video data, very large images, or visual simulation then the 48GB of memory on the A6000 may be an advantage. For a typical desktop display, lower end NVIDIA professional series GPUs like the A2000 may be plenty. NVIDIA’s “consumer” GeForce GPUs are also an option. Anything from the RTX 4060 to RTX 4090 are very good. These GPUs are also excellent for more demanding 3D display requirements. However, it is a good idea to check with the vendor or developer of the software you are using to see if they have specific requirements for “professional” GPUs.

What video cards are recommended for GPU compute acceleration?

There are a few considerations. Do you need double precision (FP64) for your application? If so, then you are limited to the NVIDIA compute series – such as the A100 or A30. These GPUs are passively cooled and are suitable for use in rack mounted chassis with the needed cooling capability. None of the RTX GPUs, consumer or professional, have good double precision support.

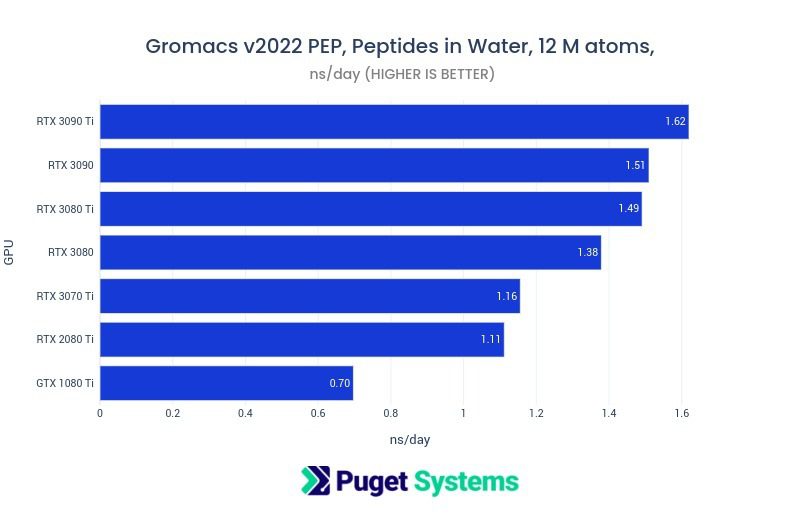

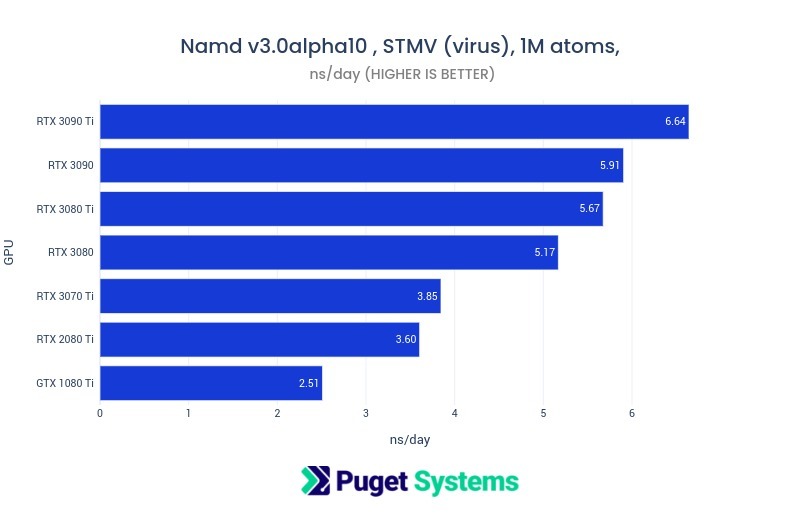

Fortunately, many scientific applications that have GPU acceleration work with single precision (FP32). In this case the higher end RTX GPUs offer good performance and relatively low cost. GPUs like the A5000 and A6000 are high quality and work well in multi-GPU configurations. Consumer GPUs like RTX 3080 Ti and 3090 can give very good performance but may be difficult to configure in a system with more than two GPUs because of cooling design and physical size.

In addition to the considerations already mentioned, memory size may be important is in general can be a limiting factor in the use of GPUs for compute.

How much VRAM (video memory) does scientific computing need?

This can vary depending on the application. Many applications will give good acceleration with as little as 12GB of GPU memory. However, if you are working with large jobs or big data sets then 24GB (A5000, RTX 3090) or 48GB (A6000) may be required. For the most demanding jobs, NVIDIA’s A100 compute GPU comes in 40 and 80GB configurations.

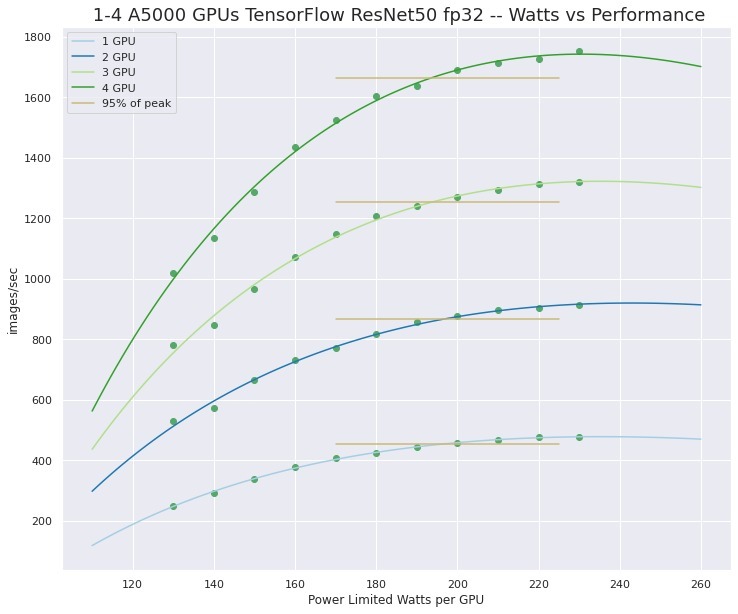

Will multiple GPUs improve performance in scientific computing?

Again this will depend on the application. Multi-GPU acceleration is not automatic just because you have more than one GPU in your system. The software has to support it. However, if your application scales well by distributing data or work across the GPUs, perhaps with Horovod (MPI), then a multi-GPU workstation can offer performance approaching that of a supercomputer of the not too distant past.

Does scientific computing need a “professional” video card?

Not necessarily – many important scientific calculations have been done on NVIDIA consumer GPUs. However, there are definitely things to consider – which we covered in more detail in the preceeding questions (above).

Does scientific computing run better on NVIDIA or AMD GPUs?

Thanks to their development of CUDA, and the numerous applications that use it, NVIDIA GPUs are currently the standard for scientific computing. While nearly all GPU accelerated applications are built with CUDA, there is some usage of openCL (which is supported on AMD and NVIDIA GPUs) and there are utilities such as AMD’s own ROCm. However, those are not widely used and can be difficult to configure. This situation will certainly change as more work is done with the newly deployed AMD GPU-accelerated supercomputers and Intel’s entry into the GPU compute acceleration realm.

When is GPU acceleration not appropriate for scientific computing?

If you can use GPU acceleration then you probably should. However, if your application has memory demands exceeding that of GPUs or the high cost of NVIDIA compute GPUs is prohibitive then a many-core CPU may be appropriate instead. Of course if your application is not specifically written to support GPUs then there is no magic to make it work. Needing double precision accuracy for your calculations will also limit you either CPUs or NVIDIA compute-class GPUs, the latter of which are generally not suitable in a workstation and incur a high cost (but potentially offer stellar performance).

Memory (RAM)

Memory performance and capacity are very important in many scientific applications. In fact, memory bandwidth will be the chief bottleneck in memory-bound programs. Applications that involve “solvers” for simulations may be doing solutions of differential equations that are often memory bound. This ties in with our recommendation of CPUs that provide 8 memory channels.

How much RAM does scientific computing need?

Since there are so many potential applications and job sizes this is highly dependent on the specific use case. It is fortunate that modern Intel and AMD workstation platforms support large memory configurations even in single socket systems. For workflows focused on CPU-based calculations, 256 to 512GB is fairly typical – and even 1TB is not unheard of.

How much system memory do I need if I’m using GPU acceleration?

There general guidance on this. It is highly recommended that the system be configured with at least twice as much system memory (RAM) as there is total GPU memory (VRAM) across all cards. For example, a system with two RTX 3090 GPUs (2 x 24 = 48GB total VRAM) should have at least 96GB, but more commonly 128GB of system memory. That is more than twice the amount, but is a typical memory module configuration (unlike 96GB). This system memory recommendation is to ensure that memory can be mapped from GPU space to CPU space and to provide staging and buffering for instruction and data transfer without stalling.

Storage (Hard Drives)

Storage is one of those areas where going with “more than you think you need” is probably a good idea. The actual amount will depend on what sort of data you are working with. It could range from a few 10’s of gigabytes to several petabytes!

What storage configuration works best for scientific computing?

A good general recommendation is to use a highly performant NVMe drive of capacity 1TB as the main system drive – for the OS and applications. You may be able to configure additional NVMe storage for data needs, however there are larger capacities available with “standard” (SATA based) SSDs. For very large storage demands, older style platter drives can offer even higher capacity. For exceptionally large demands, external storage servers may be the best option.

My application recommends configuring a “scratch space”, what should I use?

Scratch space has often been a configuration in quantum chemistry applications for storing integrals. There are other applications that also are built expecting an available scratch space. For these cases, an additional NVMe drive would be a very good option. However, if there is a configuration option in the software to avoid using out-of-core scratch then that may be the best option. The need for scratch space was common when system memory capacity was small. It is likely much better to increase your RAM size if it will let you avoid scratch space, as memory is orders of magnitude faster than even high-speed SSDs.

Should I use network attached storage for scientific computing?

Network-attached storage is another consideration. It’s become more common for workstation motherboards to have 10Gb Ethernet ports, allowing for network storage connections with reasonably good performance without the need for more specialized networking add-ons. Rackmount workstations and servers can have even faster network connections, often using more advanced cabling than simple RJ45, making options like software-defined storage appealing.