Introduction

There are a lot of choices to make when configuring a workstation, especially when it comes to which hardware will comprise the build. But it’s just as important to consider what software your system will be running. That’s precisely why Puget Systems tests a wide variety of software packages: so that we can make informed hardware recommendations based on a given workflow. One particular software choice that may be taken for granted is which Operating System (OS) to install on a system. It comes as little surprise that Windows continues to be a popular choice for professional workstations, and in 2023, about 90% of Puget Systems customers purchased a Windows-based system. Today, we’ll discuss the benefits and drawbacks of Windows-based workstations compared to Linux-based systems, specifically with regard to Stable Diffusion workflows, and provide performance results from our testing across various Stable Diffusion front-end applications.

We will discuss some other considerations with regard to the choice of OS, but our focus will largely be on testing performance with both an NVIDIA and an AMD GPU across several popular SD image generation frontends, including three forks of the ever-popular Stable Diffusion WebUI by AUTOMATIC1111.

Resource Usage

One of the common reasons for using Linux for AI is that it tends to consume less VRAM than Windows during normal usage. With a 4K screen and a single browser window open, we can expect Windows to reserve just over 1GB of VRAM. In comparison, Ubuntu’s default desktop environment, GNOME, uses about 700MB under the same conditions. Although that’s not a huge difference, it could be enough to prevent errors or performance drops from VRAM overflows in certain circumstances. Additionally, unlike Windows, a Linux-based OS gives us the option to run without a GUI at all, dropping the desktop’s VRAM usage to effectively zero, which is great if you have a mid- or low-end GPU and need to squeeze every last bit of VRAM out of it. Although there are plenty of options for generating images via the command line, in practice, this would likely mean using two systems: one to run the SD backend as a server and another to connect to the front-end image generation GUI via a browser.
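For readers who want to check the desktop overhead on their own NVIDIA system, the following is a minimal sketch that parses the CSV output of `nvidia-smi --query-gpu=memory.used,memory.total --format=csv,noheader,nounits`. The helper names (`parse_vram_csv`, `free_vram_mib`, `query_vram`) are our own for illustration, and this is not how the figures above were collected.

```python
import subprocess

# nvidia-smi query that prints one "used, total" line (in MiB) per GPU.
QUERY = [
    "nvidia-smi",
    "--query-gpu=memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_vram_csv(text):
    """Parse 'used, total' lines (MiB) into a list of (used, total) tuples."""
    rows = []
    for line in text.strip().splitlines():
        used, total = (int(field.strip()) for field in line.split(","))
        rows.append((used, total))
    return rows


def free_vram_mib(rows):
    """Return the VRAM (MiB) still available on each GPU."""
    return [total - used for used, total in rows]


def query_vram():
    """Run nvidia-smi and return parsed rows (requires an NVIDIA driver)."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_vram_csv(result.stdout)
```

Calling `free_vram_mib(query_vram())` with only the desktop and a browser open gives a rough idea of how much VRAM is left for image generation on a given OS.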

Optimizations

Towards the end of 2023, a pair of optimization methods for Stable Diffusion models were released: NVIDIA TensorRT and Microsoft Olive for ONNX runtime. Both of these options operate under the basic principle of converting SD checkpoints into quantized versions optimized for inference, resulting in improved image generation speeds. Because these optimizations were designed for the ONNX runtime, they are generally available regardless of which OS you choose. However, most implementations of Olive, such as Microsoft’s extension for AUTOMATIC1111’s SD-WebUI, are designed for use with DirectML, which relies on DirectX within Windows. We covered these optimizations in a previous article if you want a head-to-head comparison.

Although these optimizations are guaranteed to increase SD inference performance, they have drawbacks. First and foremost, any checkpoints and LoRAs must first be quantized to benefit from the improved performance. This isn’t much of a problem on its own, as the quantization process typically takes a few minutes at most. However, other variables must be considered during the conversion. In the case of TensorRT, the optimized model must also account for the expected width and height of the generation, along with batch sizes. Improvements have been made in this area with the introduction of “dynamic engines” that can accept a broader range of resolutions, but these reportedly come at a cost to performance and VRAM usage. In the case of Microsoft Olive, LoRA support is more limited, and LoRAs must be baked into the checkpoint during quantization.
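To make the shape constraint concrete, here is a small illustrative sketch of the difference between a static engine (one fixed resolution and batch size) and a dynamic engine (a range of each). The `EngineProfile` class and the example ranges are hypothetical and only model the idea; this is not the TensorRT API or the behavior of any specific extension.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EngineProfile:
    """Hypothetical description of the shapes an optimized engine accepts."""
    min_width: int
    max_width: int
    min_height: int
    max_height: int
    min_batch: int
    max_batch: int

    def supports(self, width, height, batch):
        """Return True if the requested generation fits inside the profile."""
        return (
            self.min_width <= width <= self.max_width
            and self.min_height <= height <= self.max_height
            and self.min_batch <= batch <= self.max_batch
        )


# A static engine: min and max are identical, so only one shape is valid.
static_1024 = EngineProfile(1024, 1024, 1024, 1024, 1, 1)

# A dynamic engine: accepts a range of shapes (example values, not measured).
dynamic = EngineProfile(768, 1024, 768, 1024, 1, 4)
```

With these example profiles, a 1024×1024 request at batch size 4 is rejected by the static engine but accepted by the dynamic one, which is the flexibility that dynamic engines reportedly trade some speed and VRAM for.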

For someone with a well-defined image generation workflow without much variation, these downsides may not be a problem, and the performance benefits may be well worth the initial setup time. However, for those who frequently experiment with various checkpoints, LoRAs, and image resolutions, the relative inflexibility of these optimizations makes them far less appealing.

Test Setup

Threadripper PRO Test Platform

| CPU: AMD Ryzen Threadripper PRO 7985WX 64-Core |

| CPU Cooler: Asetek 836S-M1A 360mm Threadripper CPU Cooler |

| Motherboard: ASUS Pro WS WRX90E-SAGE SE (BIOS Version: 0404) |

| RAM: 8x Kingston DDR5-5600 ECC Reg. 1R 16GB (128GB total) |

| GPUs: AMD Radeon RX 7900 XTX (Driver Version: 23.Q4 on Windows; 6.3.6 on Ubuntu); NVIDIA GeForce RTX 4080 (Driver Version: 551.76 on Windows; 545.29.06 on Ubuntu) |

| PSU: Super Flower LEADEX Platinum 1600W |

| Storage: Samsung 980 Pro 2TB (Windows); Samsung 980 Pro 1TB (Ubuntu) |

| OS: Ubuntu 22.04.3 LTS (kernel 6.5.0-26-generic); Windows 11 Pro 23H2 (Build 22631.2396) |

Generation Data

| Prompt: photo of a serene lake reflecting milky way at night; Model: stable-diffusion-xl-base-1-0; Resolution: 1024×1024; Scheduler: Euler; Sampler: Normal; Steps: 20 |

Preceding each test was a quick warmup where a single image was generated to ensure everything was loaded and the pipeline was ready to go. Then, two tests were performed, the first consisting of a batch count of 10 images and batch size 1, and the second being a batch count of 1 and batch size 4. Each test was performed twice, and the results were averaged.
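The procedure above can be sketched as a small timing harness. This is a minimal illustration under assumptions, not the tooling used for this article: `generate` is a hypothetical callable that produces one batch of images, and iterations per second is computed here as sampler steps per batch divided by elapsed time, which may differ from how each front end reports the figure.

```python
import time
from statistics import mean


def run_benchmark(generate, *, steps, batch_count, batch_size,
                  repeats=2, clock=time.perf_counter):
    """Warm up once, time `repeats` runs, and return the mean iterations/sec.

    `generate(batch_size)` is a hypothetical function that blocks until one
    batch of `batch_size` images has been generated.
    """
    # Warmup: a single image so the model is loaded before timing starts.
    generate(1)

    results = []
    for _ in range(repeats):
        start = clock()
        for _ in range(batch_count):
            generate(batch_size)
        elapsed = clock() - start
        # Assumption: one "iteration" is one sampler step of one batch.
        results.append(steps * batch_count / elapsed)
    return mean(results)
```

The two tests described above would correspond to `run_benchmark(generate, steps=20, batch_count=10, batch_size=1)` and `run_benchmark(generate, steps=20, batch_count=1, batch_size=4)`.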

All Windows tests were performed with hardware-accelerated GPU scheduling (HAGS) on and off. Additionally, all tests with the NVIDIA hardware were performed with both xFormers and SDP cross-attention optimizations. As of the writing of this article, xFormers is not available for AMD GPUs, so only SDP was used in the AMD testing.
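As a quick way to see how many configurations this produces per application, the test matrix described above can be enumerated as follows. The names (`ATTENTION`, `OS_VARIANTS`, `build_matrix`) are our own for illustration, and the sketch only encodes the combinations stated in the text.

```python
from itertools import product

# Cross-attention optimizations tested per GPU vendor (xFormers is NVIDIA-only).
ATTENTION = {"NVIDIA": ["xFormers", "SDP"], "AMD": ["SDP"]}

# HAGS is a Windows setting, so Ubuntu has a single variant.
OS_VARIANTS = [("Windows", "HAGS on"), ("Windows", "HAGS off"), ("Ubuntu", "n/a")]


def build_matrix():
    """Return every (gpu, os, hags, attention) combination described above."""
    rows = []
    for gpu, attentions in ATTENTION.items():
        for (os_name, hags), attention in product(OS_VARIANTS, attentions):
            rows.append((gpu, os_name, hags, attention))
    return rows
```

This yields six NVIDIA configurations and three AMD configurations, each of which is then run through both batch tests where the application supports them.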

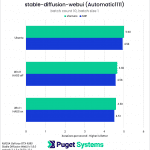

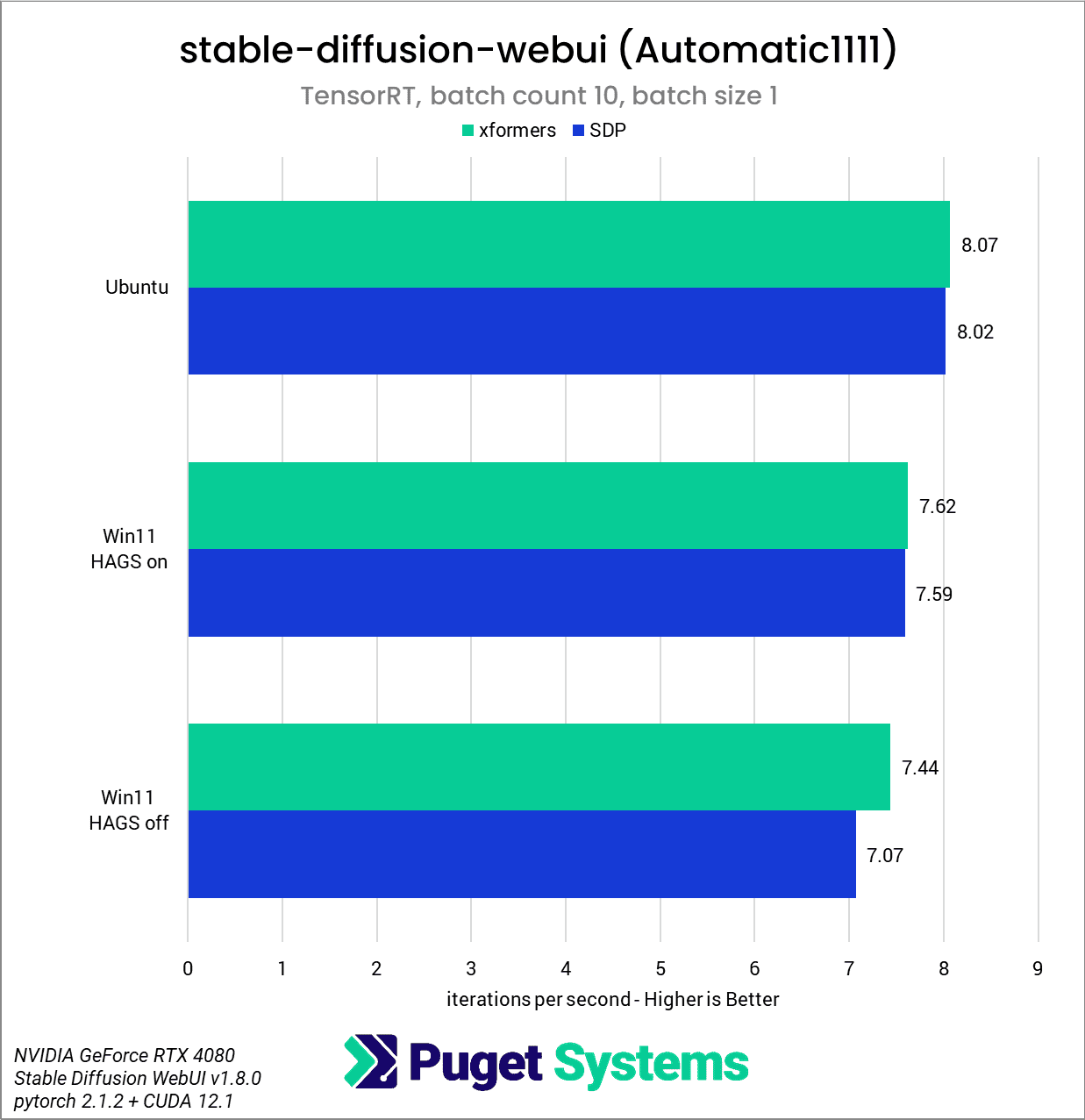

SD-WebUI – NVIDIA

Starting with the ubiquitous SD-WebUI, Ubuntu takes the performance lead, outperforming the Windows equivalent by about 5-8% in both the standard tests and with TensorRT. We also found a slight performance boost with HAGS disabled in all tests, except within the batch count 10 test using TensorRT. Interestingly, the SD-WebUI tests were the only time xFormers outperformed SDPA, as the results below show.

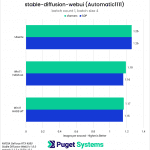

SD-WebUI-DirectML (fork) – AMD

A new option for AMD GPUs is ZLUDA, which is a translation layer that allows unmodified CUDA applications to run on AMD GPUs. However, the future of ZLUDA is unclear, as the CUDA EULA forbids reverse-engineering CUDA elements for translation targeting non-NVIDIA platforms.

We decided to run some tests, and surprisingly, we found several instances where ZLUDA within Windows outperformed ROCm 5.7 in Linux, such as within the DirectML fork of SD-WebUI. Compared to other options, ZLUDA does not appear to be meaningfully impacted by the presence of HAGS.

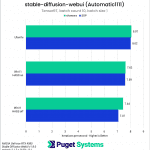

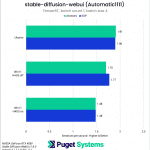

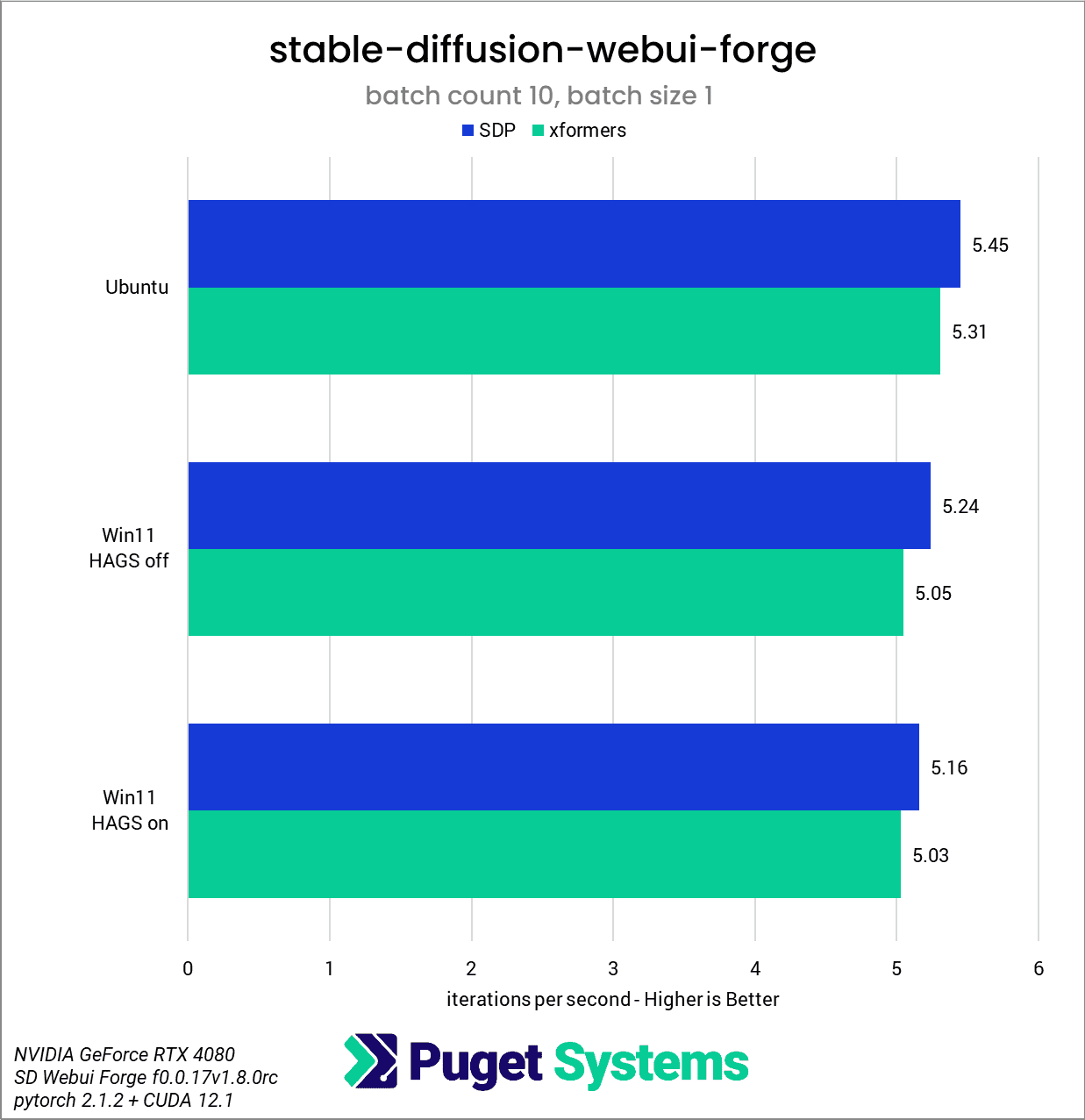

SD-WebUI-Forge (fork) – NVIDIA

With SD-WebUI-Forge, we start to see a pattern that continues fairly consistently throughout the rest of the results: Ubuntu with SDP cross-attention is the overall performance winner, and within Windows, disabling HAGS has a slight benefit to performance. It’s worth keeping in mind, though, that the difference in OS only represents an average 4-5% increase in iterations per second. Forge also achieved the most iterations per second overall, which is fitting considering it’s presented as being a more performant version of SD-WebUI.

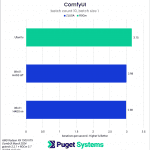

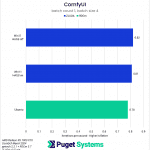

ComfyUI

In ComfyUI, the results were quite close between Ubuntu and Windows. Ubuntu came out slightly ahead when SDPA was used, but it also received the worst result of all when using xFormers. This represents the only performance reduction we found in Ubuntu compared to Windows. Just as we saw in the SD-WebUI test, disabling HAGS led to a slight performance improvement.

On the AMD side (charts 3-4), Ubuntu + ROCm came out ahead by about 5% in the batch count 10 test but fell behind when the batch size was increased to 4. Once again, ZLUDA did not appear to be significantly affected by the HAGS option.

Fooocus

Within Fooocus, the combination of Ubuntu and SDPA comes out ahead overall. The impact of HAGS is less clear, with a notable decrease in performance with xFormers. Fooocus does not appear to support image generation with batch sizes larger than 1, so we only have results for the batch count 10 test.

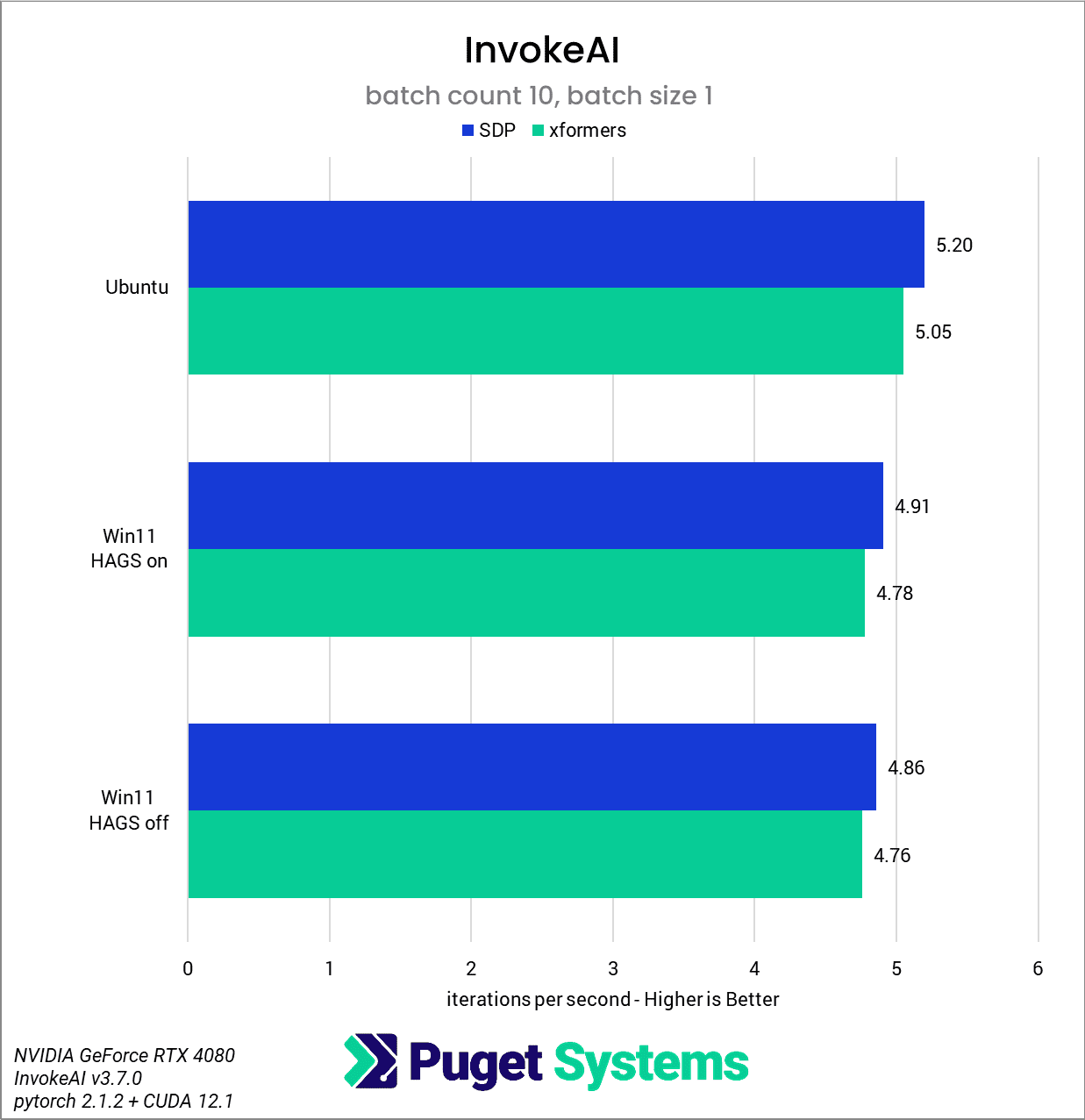

InvokeAI

Like Fooocus, InvokeAI does not support batch sizes larger than one, so only one set of results is provided here as well. As with the previous results, the Ubuntu + SDPA combination comes out ahead overall. Interestingly, this is one of the few examples where disabling HAGS slightly reduced performance.

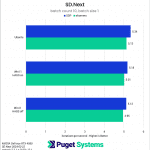

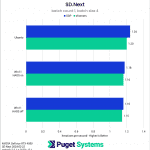

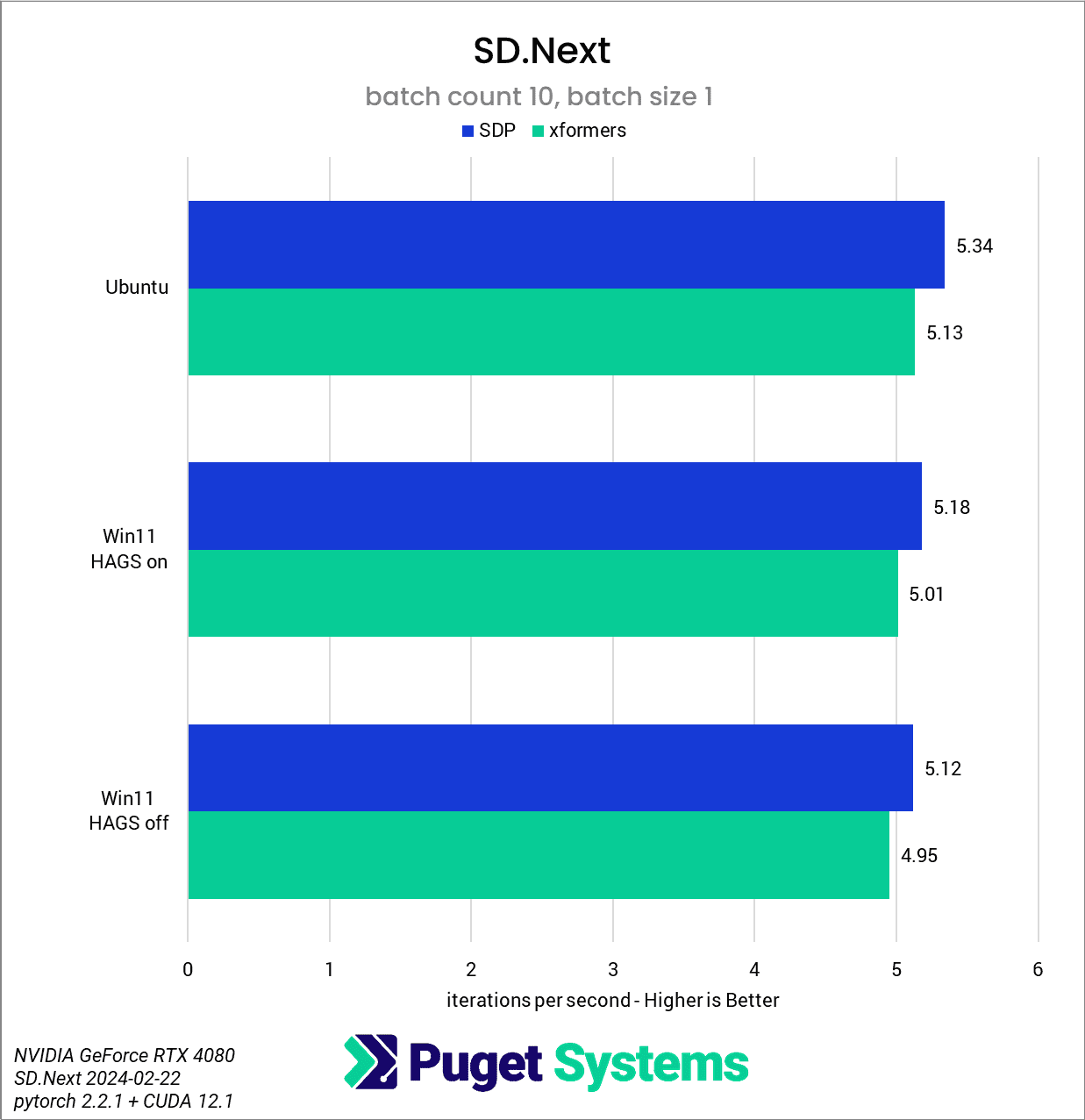

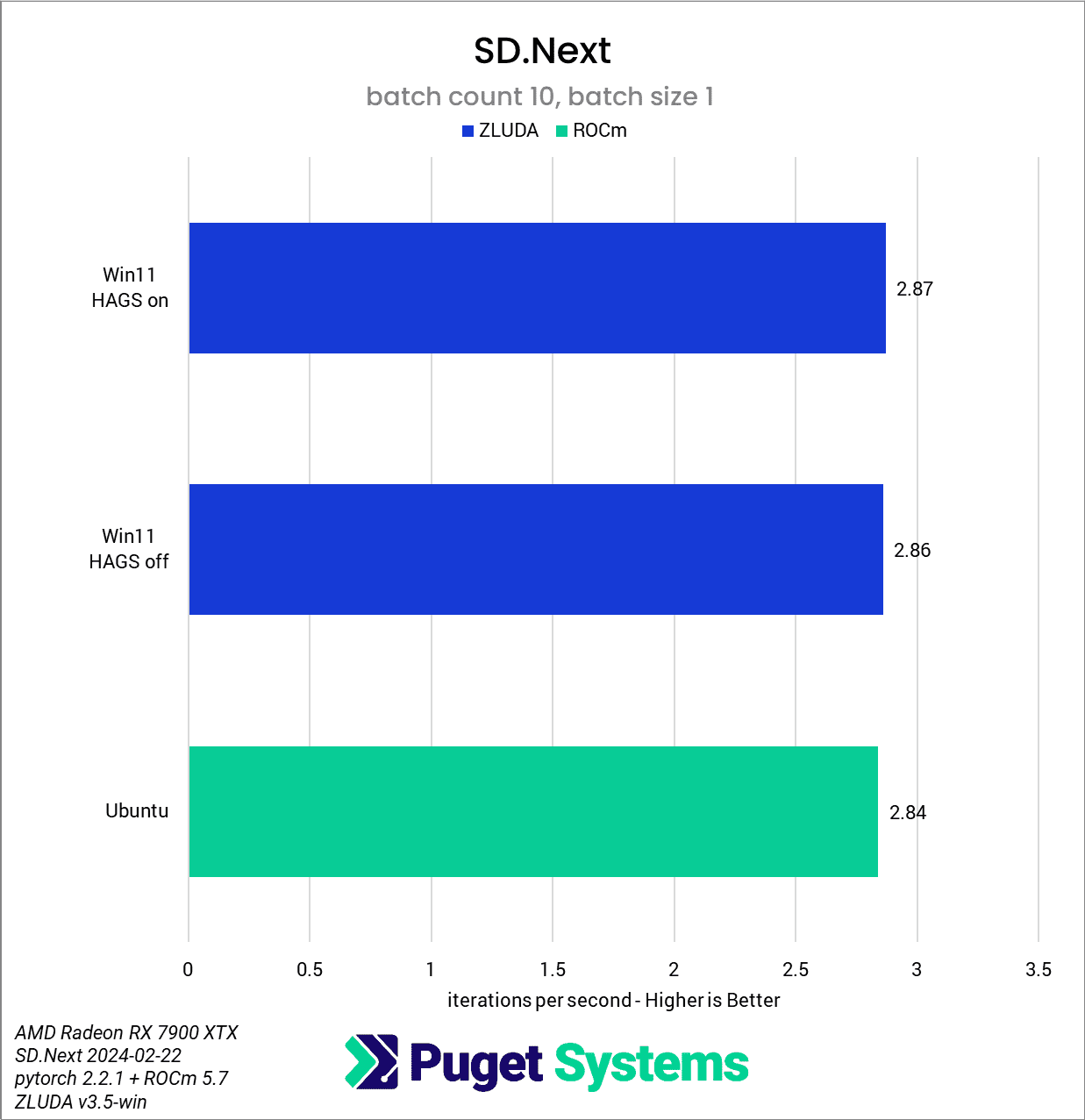

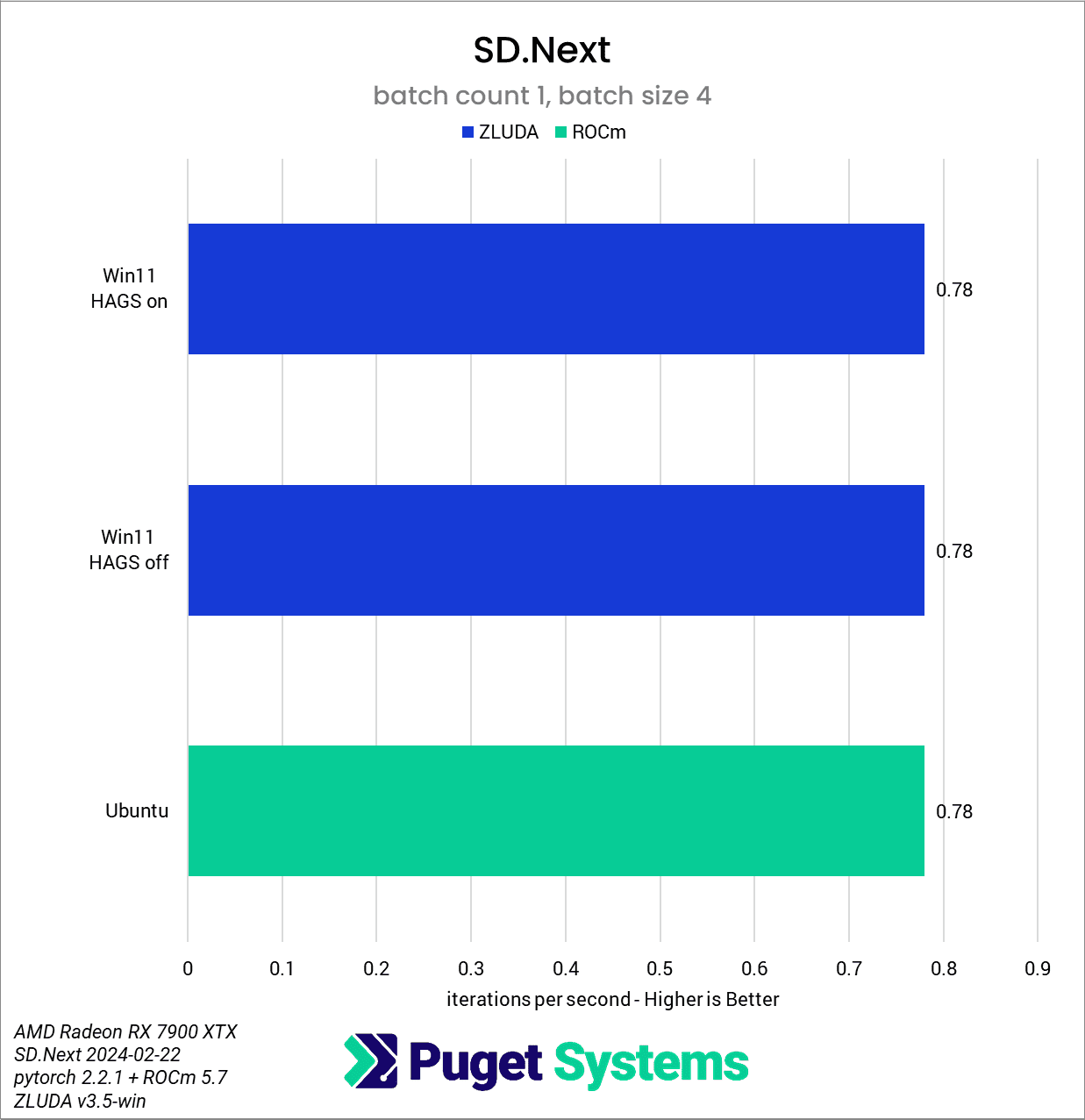

SD.Next

Finally, the SD.Next results once again show that Ubuntu with SDPA is the most performant option overall. However, just like the InvokeAI results above, we found that disabling HAGS slightly decreased performance.

Looking at the AMD results (charts 3-4), we again find Ubuntu + ROCm 5.7 trailing slightly behind ZLUDA within Windows, but only by about 1%. The HAGS-on test did report a slightly higher speed, but by less than a single percentage point, further establishing that HAGS has little impact on ZLUDA performance.

Conclusion

Throughout our testing of the NVIDIA GeForce RTX 4080, we found that Ubuntu consistently provided a small performance benefit over Windows when generating images with Stable Diffusion and that, except for the original SD-WebUI (A1111), SDP cross-attention is a more performant choice than xFormers. Additionally, our results show that the Windows 11 default setting of HAGS enabled is typically not the best option for performance. However, the performance impact of leaving HAGS enabled is generally minor, and leaving it enabled may even be the better choice in some applications, such as SD.Next and InvokeAI.

As long as the ZLUDA project remains available, we found that owners of the AMD Radeon RX 7900 XTX can expect similar performance between Ubuntu/ROCm and Windows/ZLUDA, with the HAGS setting in Windows having little to no effect on image generation speeds.

That said, even with the most generous comparison between the two, Ubuntu only provided a performance gain of about 9.5%, with most examples falling to around a 5% or smaller improvement. This means the performance argument for Ubuntu over Windows may not overcome the typical arguments against a switch from Windows, such as software compatibility. Yet the benefit of reduced VRAM usage could tip the scales towards Linux for less powerful GPUs with smaller amounts of VRAM. However, anyone looking to achieve the absolute fastest possible image generation speeds using Stable Diffusion should look beyond Windows 11.
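For anyone comparing their own results, the percentage gains quoted throughout this article are simple relative differences in iterations per second. The sketch below shows the arithmetic; the function name `percent_gain` is our own, and the numbers in the usage note are made up to illustrate the calculation rather than taken from our charts.

```python
def percent_gain(candidate_its, baseline_its):
    """Return how much faster `candidate_its` is than `baseline_its`, in percent.

    Both arguments are iterations per second; a negative result means the
    candidate configuration was slower than the baseline.
    """
    if baseline_its <= 0:
        raise ValueError("baseline_its must be positive")
    return (candidate_its / baseline_its - 1.0) * 100.0
```

For example, a hypothetical 10.95 it/s on one OS against 10.0 it/s on the other works out to a gain of about 9.5%, while 10.5 it/s against 10.0 it/s is about 5%.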