
Introduction

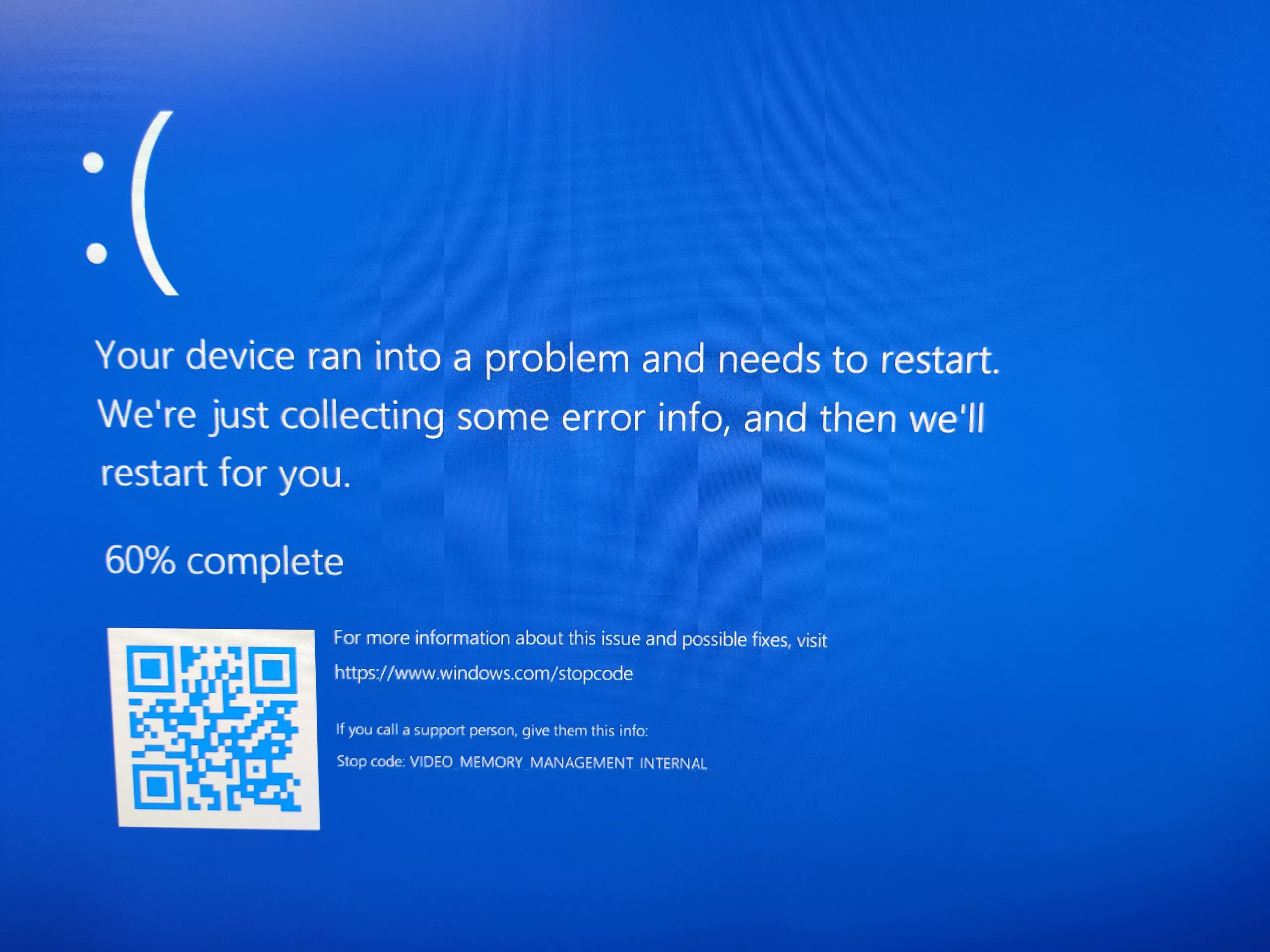

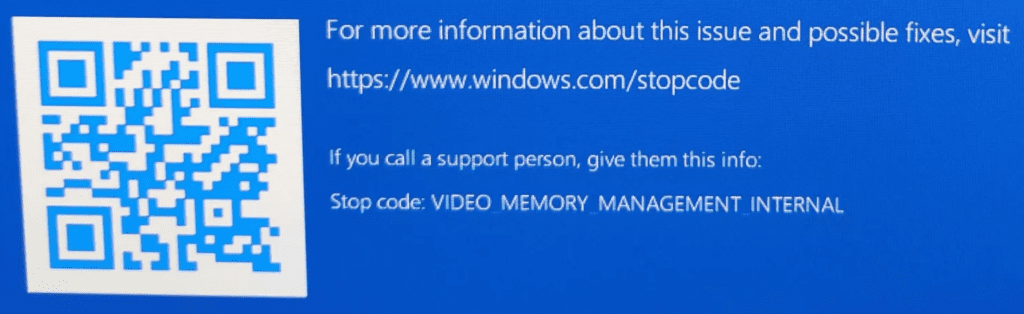

We have discovered what appears to be a bug within recent Nvidia driver packages that is leading to hard-locks or VIDEO_MEMORY_MANAGEMENT_INTERNAL blue-screens in certain rendering and encoding workflows.

Issue

On systems running an affected driver, the machine consistently freezes or blue-screens once the graphics card’s VRAM reaches 100% utilization. So far, our testing has shown that the blue-screens point to either “watchdog.sys” or “dxgmms2.sys”.

Limited testing with AMD GPUs indicates that Adrenalin drivers v23.1.1 are not affected by this issue.

This issue presents most commonly in Cinema4D/Redshift and After Effects. It can happen in Premiere Pro as well; however, the most important factor is not the application but whether the system hits 100% VRAM usage, which is easier to do with multi-cam edits or numerous plugins/effects.
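Because the trigger is VRAM saturation rather than a specific application, it can help to watch VRAM usage while you work. The sketch below polls `nvidia-smi` (installed with the Nvidia driver, and assumed here to be on your PATH) for per-GPU memory usage and prints a warning above a threshold. The 95% threshold, the two-second interval, and the helper names are hypothetical choices for illustration, not values from our testing.

```python
import subprocess
import time

# Ask nvidia-smi for index, used and total VRAM (in MiB) for every GPU, as bare CSV.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_vram(csv_text):
    """Return a list of (gpu_index, used_mib, total_mib) tuples from nvidia-smi CSV output."""
    rows = []
    for line in csv_text.strip().splitlines():
        index, used, total = (field.strip() for field in line.split(","))
        rows.append((int(index), int(used), int(total)))
    return rows


def near_full(rows, threshold=0.95):
    """Return the rows whose used/total VRAM fraction is at or above the threshold."""
    return [
        (idx, used, total)
        for idx, used, total in rows
        if total > 0 and used / total >= threshold
    ]


def watch(interval_s=2.0, threshold=0.95):
    """Poll nvidia-smi until interrupted (Ctrl+C), warning when a GPU's VRAM is nearly full."""
    while True:
        result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
        for idx, used, total in near_full(parse_vram(result.stdout), threshold):
            print(f"WARNING: GPU {idx} is using {used}/{total} MiB ({used / total:.0%}) of VRAM")
        time.sleep(interval_s)
```

Calling `watch()` in a terminal while rendering or editing gives an early signal that a workflow is approaching the 100% point where these crashes occur.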

Solution

We have recently identified a driver version that appears to resolve the VIDEO_MEMORY_MANAGEMENT_INTERNAL (VMMI) BSOD. We have tested it on several configurations, and so far, everything appears to be stable and operational. While neither the patch notes nor Nvidia’s driver release notes indicate an intentional fix, we are unable to reproduce a failure at this time. Hopefully that doesn’t change, but once you’re on the recommended driver, it is wise to avoid driver updates until you can be certain they won’t reintroduce the crash.

The following drivers are currently fully operational:

Nvidia Studio Driver for GeForce – 535.98

Nvidia New Feature Branch for Quadro – 535.98
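To confirm which driver a system is actually running, `nvidia-smi` can report the installed version. The sketch below compares it against the driver versions named in this article; a version that is not listed has simply not been tested by us, which does not necessarily mean it is affected. The `TESTED_VERSIONS` table and the function names are hypothetical, and the script assumes `nvidia-smi` is on your PATH.

```python
import subprocess

# Driver versions named in this article, with the context in which each was reported.
TESTED_VERSIONS = {
    "535.98": "recommended: Studio (GeForce) and New Feature Branch (Quadro)",
    "512.96": "workaround: last GeForce driver observed without the issue (no 40-series support)",
    "514.08": "workaround: R510 U10 professional branch, observed unaffected",
    "474.14": "workaround: R470 U12 professional branch, observed unaffected",
}


def installed_driver_versions():
    """Return the driver version string nvidia-smi reports for each GPU."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=True,
    )
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


def describe(version):
    """Describe how this article characterizes a driver version, if it mentions it at all."""
    return TESTED_VERSIONS.get(version, "not among the versions tested in this article")
```

For example, `for v in installed_driver_versions(): print(v, "->", describe(v))` prints one line per GPU.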

Workarounds

We believe the issue has been resolved by the drivers listed above. If you continue to experience BSODs, please use the workarounds below.

We’ve reported the issue to Nvidia, but while we await a fix, we have some suggestions for preventing these crashes if you’ve been impacted by this problem.

- The most recent GeForce driver that appears not to have this issue is 512.96, so rolling back to this driver may be a short-term solution. However, this driver does not support 40-series GPUs, and every driver that does support them is newer, so it appears that all 40-series drivers available when this workaround was written are affected.

- There are more options in the RTX / Quadro / Professional space, due to the various driver branches available. So far, testing has shown that both R510 U10 (514.08) and R470 U12 (474.14) appear unaffected by this issue.

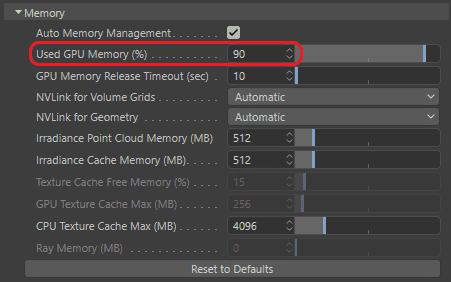

- Applications may have options for manually adjusting VRAM usage. A common workflow that leads to crashes is concurrent use of Cinema4D+Redshift and After Effects, because by default, the Redshift renderer will reserve a large amount of VRAM for itself. Lowering the “Used GPU Memory %” within the “Render Settings” menu, under the “Memory” subsection, may help prevent the crash.

- If you are unable to use the drivers above, consider what steps you can take to reduce your VRAM usage. Disabling plugins, reducing effects, and lowering project resolutions are common methods, and disconnecting secondary monitors can help as well.

- There are some indications that disabling “Hardware-accelerated GPU scheduling” within Windows can help prevent these BSODs as well, particularly in Adobe applications.

- In the worst case, you may need to resort to using CPU or software rendering/encoding options instead of utilizing the Nvidia GPU. This will greatly impact performance, but it may allow you to finish a project in a pinch.
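To see why lowering the Redshift reservation helps when After Effects runs alongside it, consider the arithmetic. The sketch below assumes the “Used GPU Memory %” value is applied to the VRAM that is free when Redshift initializes; if your version applies it to total VRAM instead, pass the card’s total. The function name and the 20 GiB figure are hypothetical, chosen only for illustration.

```python
def vram_left_for_other_apps(free_gib, used_gpu_memory_pct):
    """Estimate the VRAM (GiB) left over after a renderer reserves a percentage of it.

    Assumption: the percentage applies to free_gib, the VRAM free when the renderer starts.
    """
    if not 0 <= used_gpu_memory_pct <= 100:
        raise ValueError("used_gpu_memory_pct must be between 0 and 100")
    reserved = free_gib * used_gpu_memory_pct / 100
    return free_gib - reserved


# Hypothetical example: 20 GiB free when Redshift starts.
for pct in (90, 70, 50):
    left = vram_left_for_other_apps(20, pct)
    print(f"{pct}% reserved -> {left:.1f} GiB left for other apps")
```

Under these assumptions, a 90% reservation leaves only 2 GiB for After Effects, Windows, and every other GPU consumer, while 70% leaves 6 GiB, which makes it less likely that the combined workload reaches 100% VRAM usage.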

Update for Redshift users

On April 21st, the founder and CTO of Redshift posted an update on the Redshift forums about their progress (or lack thereof) toward a fix with Nvidia. Because a resolution to these driver issues has been slow to arrive, they are moving forward with a potential workaround in an upcoming release (3.5.15). We hope this new version will address the poor IPR (interactive preview render) performance and the BSODs that have affected Redshift users since these drivers were released last year.

Unfortunately, at this time, we do not have any new information regarding fixes or workarounds for other creative applications.

Conclusion

It seems that a number of Nvidia graphics drivers released this year lead to systems freezing and crashing when VRAM usage reaches 100%. Depending on their GPU, some users can switch to older drivers, but those limited to affected drivers may need to adjust their workflows while we await an update from Nvidia. Rolling back to GeForce driver version 512.96 seems to resolve the issue; if you’re unable to roll back, review your workflow and program settings to see whether there is a better solution. As always, please reach out to Puget Systems Support if you identify a hardware failure.
